When you create a site on hosting, close it from indexing right away; otherwise unoptimized content and duplicate pages will end up in the search results. You should also hide the web resource from visitors: people who land on an empty page leave immediately, which can later hurt the site's ranking.

In this article, we will tell you how to hide a site during development and give step-by-step instructions for closing a web resource using the tools on Cityhost hosting.

How to hide a site during development using robots.txt

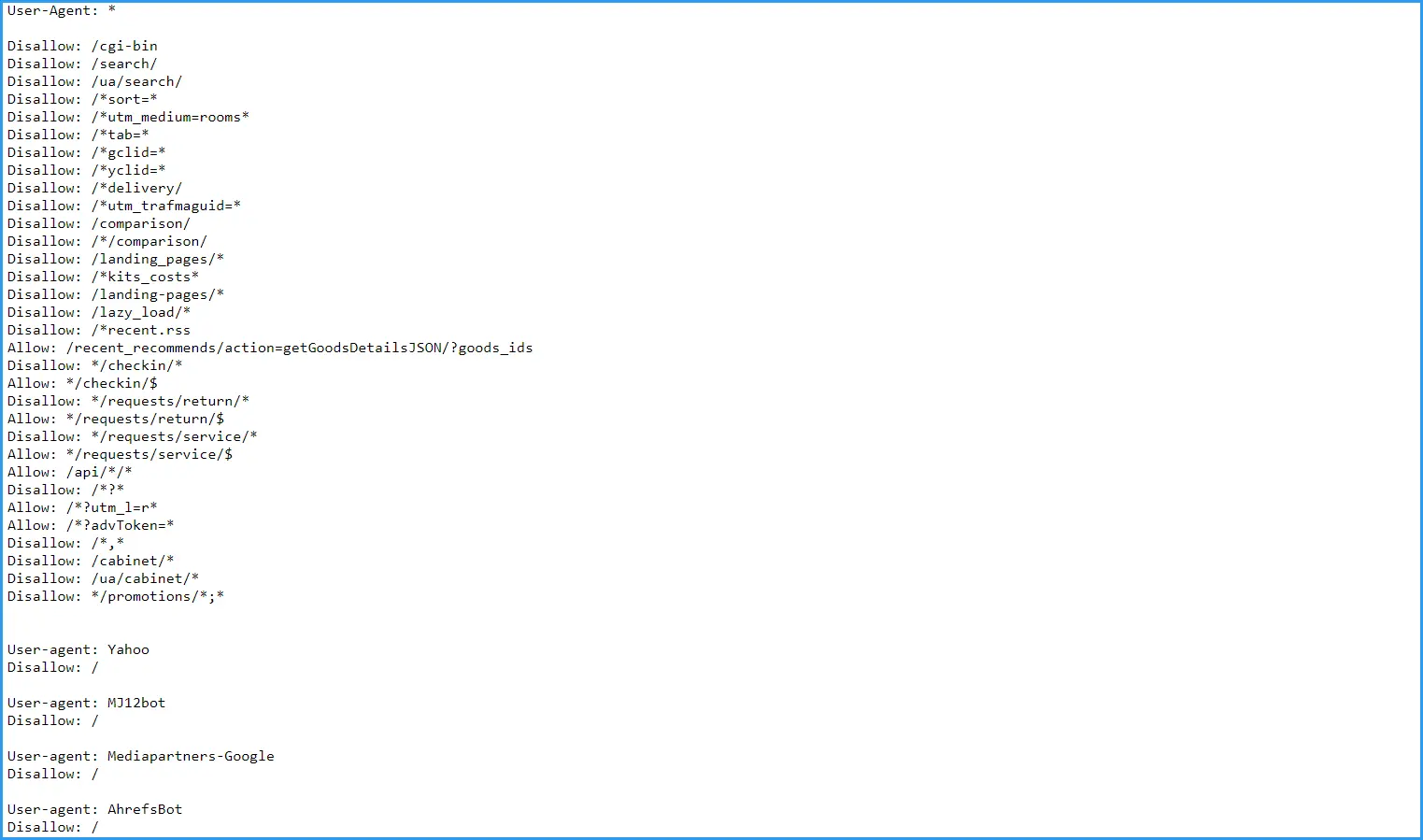

The robots.txt file is a text document designed to control the behavior of search robots (crawlers) when they scan an Internet project. Crawlers request this file first and then follow the instructions (directives) it contains: for example, some parts of the web resource are scanned and others are ignored.

You can use standard guidelines to partially or completely hide a site during development. In order to properly close a web resource from indexing, you need to know the basic directives of robots.txt:

User-agent — defines one or a group of robots that must follow the prescribed instructions;

Disallow — prohibits indexing certain pages or entire directories;

Allow — allows you to index pages or directories so that you can more precisely control the access of robots to individual information on the site;

Sitemap - specifies the path to an XML sitemap file (a list of all available pages), which helps search engines index the web resource more efficiently.

Based on the directives described above, you can easily close the site from searches using the following combination of instructions:

```text
User-agent: *
Disallow: /
```

In the given example, the symbol "*" after "User-agent" indicates that the "Disallow" directives apply to all robots. And the indication "Disallow: /" means that all pages and directories on the web resource are prohibited for indexing.
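
Before uploading the file, you can sanity-check the rules with a robots.txt parser. Below is a minimal sketch that uses Python's standard `urllib.robotparser` module; the domain `example.com` and the bot name `SomeBot` are placeholders we made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# The two-line rule set from the example above.
RULES = """\
User-agent: *
Disallow: /
"""


def is_allowed(rules: str, user_agent: str, url: str) -> bool:
    """Return True if `user_agent` may fetch `url` under the given rules."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # example.com and SomeBot are placeholders, not real project data.
    for path in ("/", "/blog/post-1", "/images/logo.png"):
        print(path, is_allowed(RULES, "SomeBot", "https://example.com" + path))
```

Every path should be reported as not allowed, which is what a fully closed site looks like to a robot that respects the file.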

Many users have a question about how to hide a site from indexing, leaving access to one search engine. For this, consider the following example:

```text
User-agent: *
Disallow: /

User-agent: Googlebot
Allow: /
```

In this case, crawling is allowed only for Google's robot, whose user-agent token is Googlebot. You can allow any other search engine instead by replacing Googlebot with the token of its crawler.
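
The same kind of check works for the "one search engine only" setup. The sketch below again relies on Python's `urllib.robotparser` and assumes the crawler token `Googlebot`; `example.com` and the second bot name are placeholders.

```python
from urllib.robotparser import RobotFileParser

# Everyone is blocked by default; the Googlebot group overrides that.
RULES = """\
User-agent: *
Disallow: /

User-agent: Googlebot
Allow: /
"""


def can_crawl(user_agent: str, url: str, rules: str = RULES) -> bool:
    """Return True if the named robot may fetch `url` under `rules`."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    page = "https://example.com/catalog/"  # placeholder address
    for bot in ("Googlebot", "bingbot"):
        print(bot, can_crawl(bot, page))
```

A robot picks the group that names it and falls back to the `*` group only when no such group exists, so the order of the two groups in the file does not matter.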

Another useful tip:

```text
User-agent: *
Disallow: /
Allow: /$
```

This combination closes the Internet project from indexing but leaves the main page accessible. It is especially useful for new web resources: you can keep configuring the project while search engines already learn that it exists, so it starts gaining trust earlier.
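
To see why only the main page stays open, it helps to model how a crawler resolves the two rules. The sketch below is a simplified approximation of the "longest match wins, allow wins a tie" precedence described in RFC 9309; it is our own illustration, not the code of any search engine, and it handles only the `*` and trailing `$` wildcards.

```python
import re

# The rules from the example above, as (kind, pattern) pairs.
RULES = [("disallow", "/"), ("allow", "/$")]


def _to_regex(pattern: str):
    """Translate a robots.txt path pattern into a regex anchored at the start.

    '*' matches any run of characters; a trailing '$' anchors the end.
    """
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile("^" + body + ("$" if anchored else ""))


def is_allowed(path: str, rules=RULES) -> bool:
    """Longest matching pattern wins; on a tie, allow wins; no match means allowed."""
    best_len, allowed = -1, True
    for kind, pattern in rules:
        if not pattern:
            continue  # an empty pattern matches nothing
        if _to_regex(pattern).match(path):
            is_allow = kind == "allow"
            if len(pattern) > best_len or (len(pattern) == best_len and is_allow):
                best_len, allowed = len(pattern), is_allow
    return allowed


if __name__ == "__main__":
    for path in ("/", "/about", "/index.html"):
        print(path, is_allowed(path))
```

For `/` both rules match, and the longer `Allow: /$` wins. For any other path only `Disallow: /` matches, so the path stays closed.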

How to hide a site during development at the server level

Blocking a site from indexing via robots.txt is the most common method, but it is not 100% reliable. The file only gives instructions; each search robot decides for itself whether to follow them. In some cases, search engines ignore the prohibitions and index all the data. This rarely happens, but exposing a young project to such a risk is undesirable.

We recommend additionally restricting access at the server level. To do this, use the .htaccess file, which is located in the root directory of the web resource (if the file does not exist, create it). First, add the following line, which turns off automatic directory listings so that neither robots nor visitors can browse the contents of folders that have no index file:

```apache
Options -Indexes
```

You can also add rules to the file that refuse requests from particular robots. For example, to close a site from Google's crawler, add the following directives to your .htaccess file:

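```apache
RewriteEngine On
# Answer 403 Forbidden when the User-Agent header contains UserAgentName (case-insensitive)
RewriteCond %{HTTP_USER_AGENT} UserAgentName [NC]
RewriteRule .* - [F,L]
```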

Instead of UserAgentName, substitute the name of the bot — for Google, it's Googlebot.
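
Once the rule is in place, you can check it by requesting a page while identifying as the blocked robot. The following is a minimal sketch based on Python's standard `urllib`; the helper names are ours, and the address in the comments is a placeholder to replace with your own.

```python
import urllib.error
import urllib.request


def build_request(url: str, user_agent: str) -> urllib.request.Request:
    """Prepare a GET request that identifies itself as `user_agent`."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


def status_for_user_agent(url: str, user_agent: str, timeout: float = 10.0) -> int:
    """Send the request and return the HTTP status code the server answers with."""
    try:
        with urllib.request.urlopen(build_request(url, user_agent), timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urllib raises on 4xx/5xx answers; the code is still what we want.
        return error.code


# Example usage (placeholder address, run it against your own site):
#   status_for_user_agent("https://example.com/", "Googlebot")
#   status_for_user_agent("https://example.com/", "Mozilla/5.0")
```

If the rule works, the first call should return 403 and the second one the usual 200. Keep in mind that this only imitates the robot's User-Agent header, which is exactly what the RewriteCond above inspects.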

By combining the two methods, you get the maximum effect: robots.txt tells well-behaved robots to stay away from the whole site, and .htaccess adds a second layer of protection against robots that ignore it. The only drawback is that you have to edit important files of the web resource yourself, so there is a small risk of breaking it.

How to hide a site on WordPress

Now let's consider how to close data on sites built with the popular WordPress CMS. The procedure can be divided into two stages: closing data from search robots and setting up a stub (placeholder page) for visitors.

How to prevent site indexing on WordPress

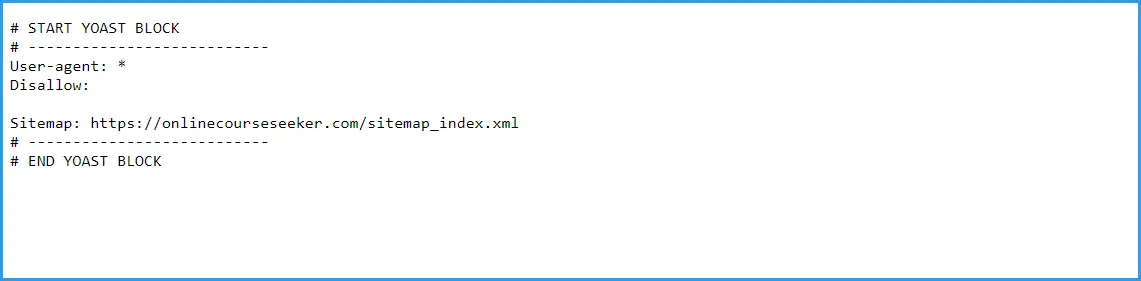

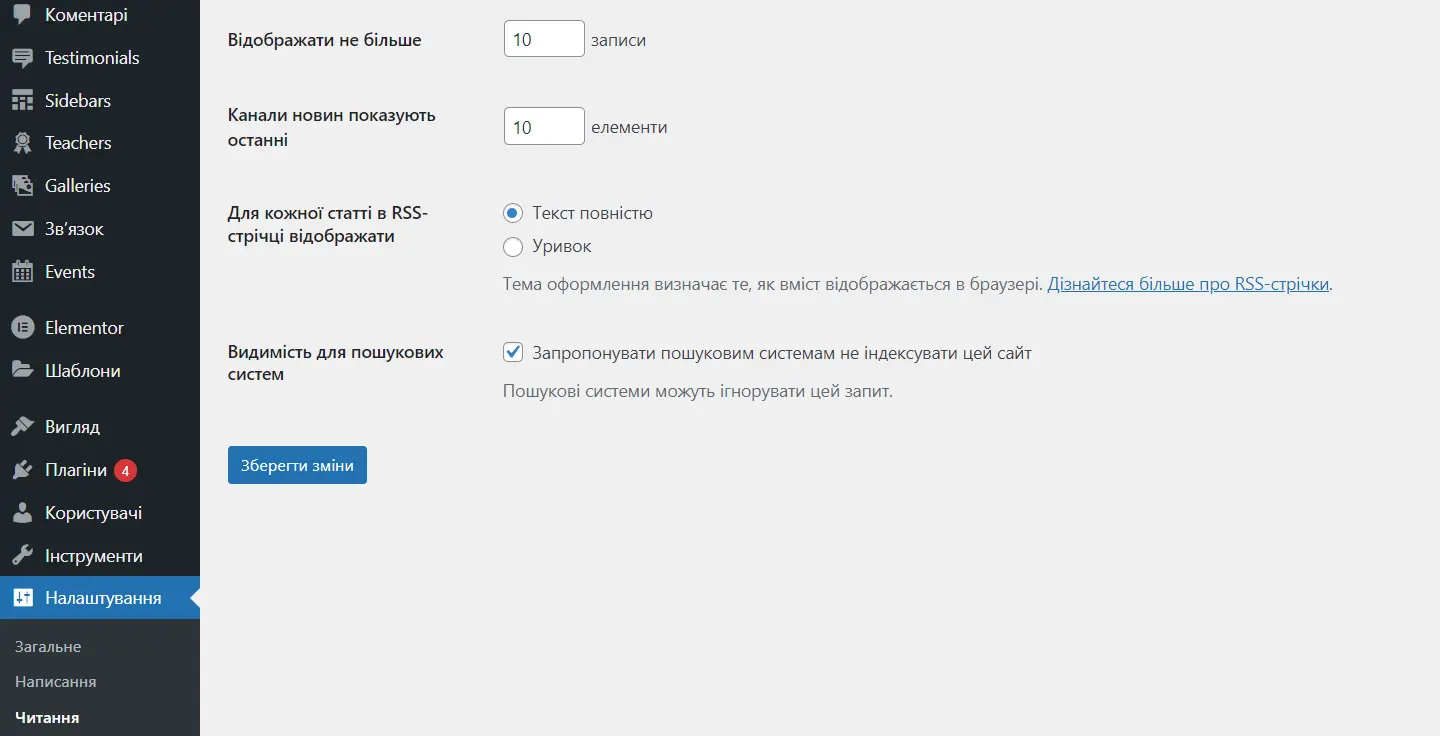

The developers of the well-known WordPress site management system provided an opportunity to hide data from crawlers, simplifying the process as much as possible.

In the control panel, go to the section "Settings" - "Reading", tick "Discourage search engines from indexing this site" and click "Save Changes".

Depending on the WordPress version, the system then either adds a noindex robots meta tag to every page or changes the rules in its virtual robots.txt file. Remember to clear the check box before launching the project. If you use the Yoast SEO plugin, a reminder about this keeps appearing when you enter the control panel.
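
To confirm that the setting took effect, you can look for a robots meta tag in the page source. The sketch below assumes the setting surfaces as `<meta name="robots" content="noindex, ...">` in the page head, which depends on your WordPress version; the HTML sample is made up for illustration.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            for part in content.split(","):
                if part.strip():
                    self.directives.add(part.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the page asks robots not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


if __name__ == "__main__":
    # Hypothetical page head, not copied from a real site.
    sample = "<html><head><meta name='robots' content='noindex, nofollow' /></head></html>"
    print(has_noindex(sample))
```

In practice you would pass in the HTML of your own home page, for example saved from the browser with "View page source".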

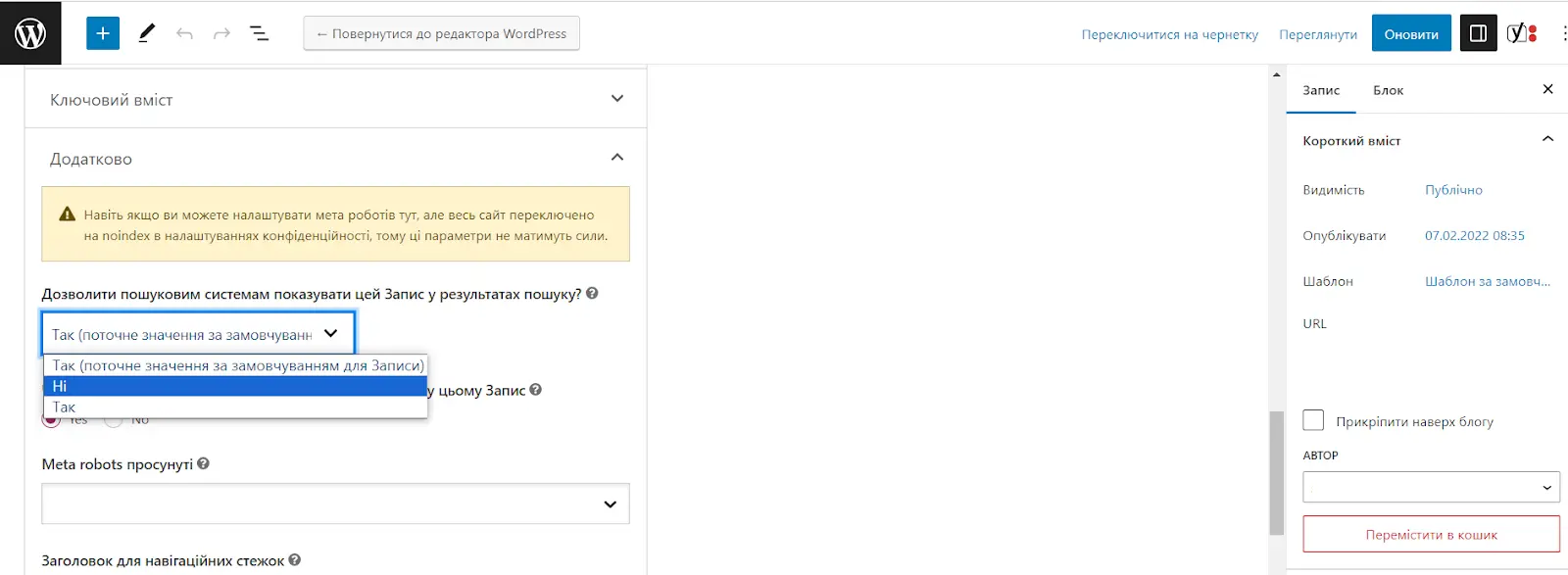

By the way, the Yoast SEO plugin is convenient for closing individual pages from indexing. Open the post (or page) for editing and find the "Advanced" tab. Under "Allow search engines to show the entry in search results," select "No." You can also specify whether robots should follow the links in this post, and select "do not index images" from the "Meta Robots advanced setting" drop-down list.

You will agree that ticking one box in the general settings is much more convenient than editing the robots.txt file yourself. The only problem is that not every content management system makes it this easy to hide data from search engines. In that case the Cityhost tools described further on will help; for now, let's look at how to put an informative stub on WordPress.

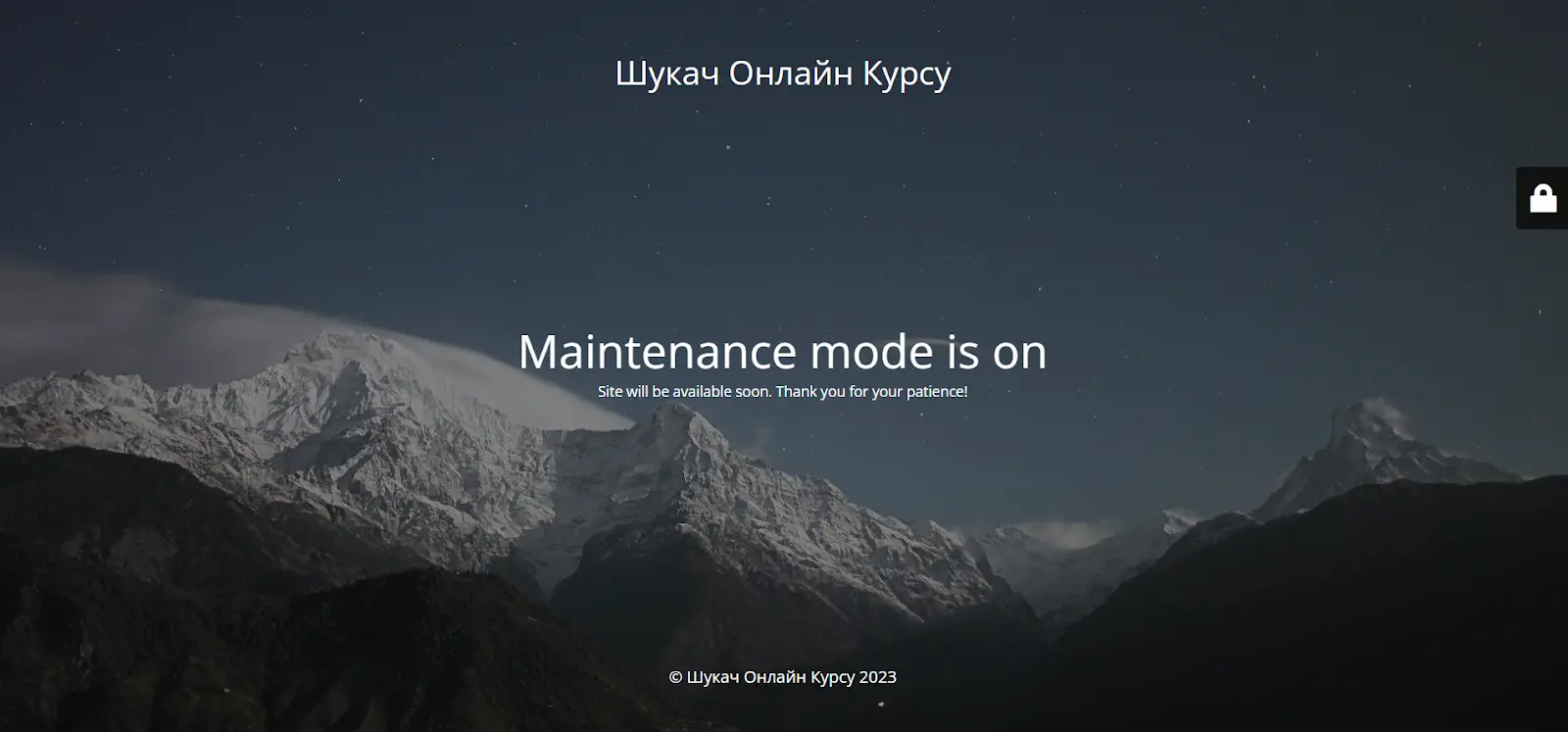

How to close a site on WordPress from visitors

Maintenance is one of the most popular WordPress plugins for installing and configuring a stub easily. You can set a background image and leave a message and contact information, so you do not lose potential customers while the site is being developed.

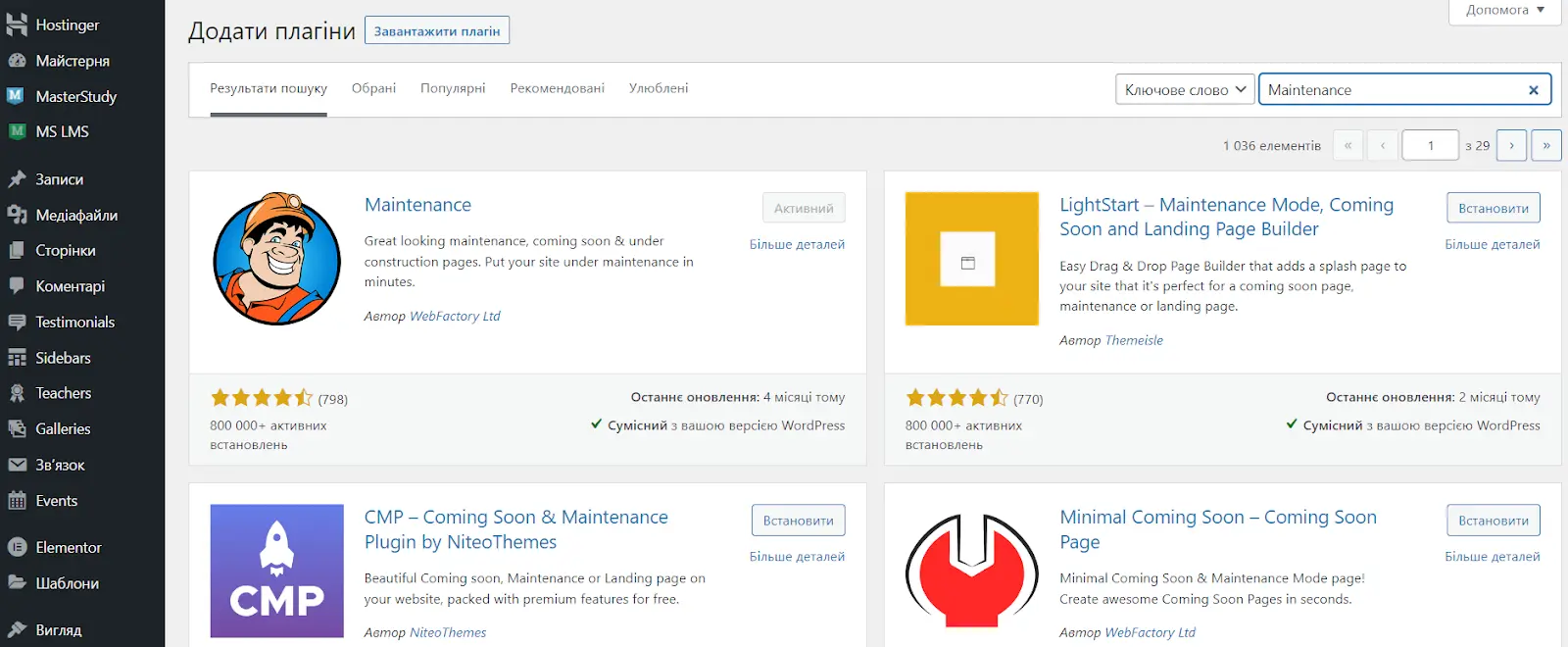

To install the Maintenance plugin, do the following:

In the control panel, go to the "Plugins" tab.

Click Add New.

Click install next to the plugin you want (the first one in the list).

Activate the extension.

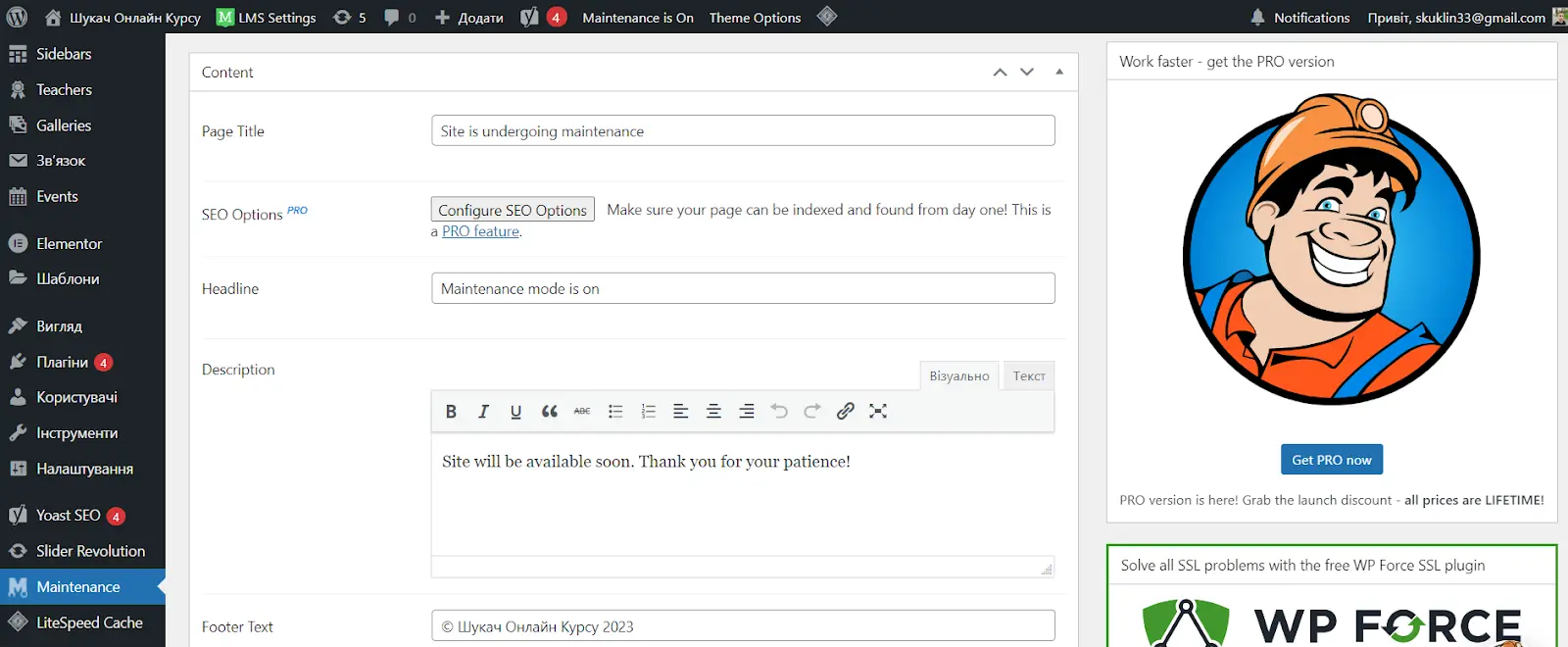

Now that the stub is active, all that remains is to configure it as you like. Go to the Maintenance tab and change the page title, headline, description and footer text, add a logo and background image, or even your own CSS code. You can also set a Google Analytics ID to receive information about visitors while the web resource is being developed.

The plugin has a PRO version, but the free one is quite enough for basic setup of the main page.

How to hide a site during development using Cityhost tools

Without false modesty, we will say that Cityhost offers a huge number of tools for working with sites. For example, among the options of the control panel is the ability to close the site from crawlers using very simple methods and to install a stub banner on the site itself.

How to close a site from search engines

With the help of control panel tools, you can easily block search bots by user-agent without editing the site code.

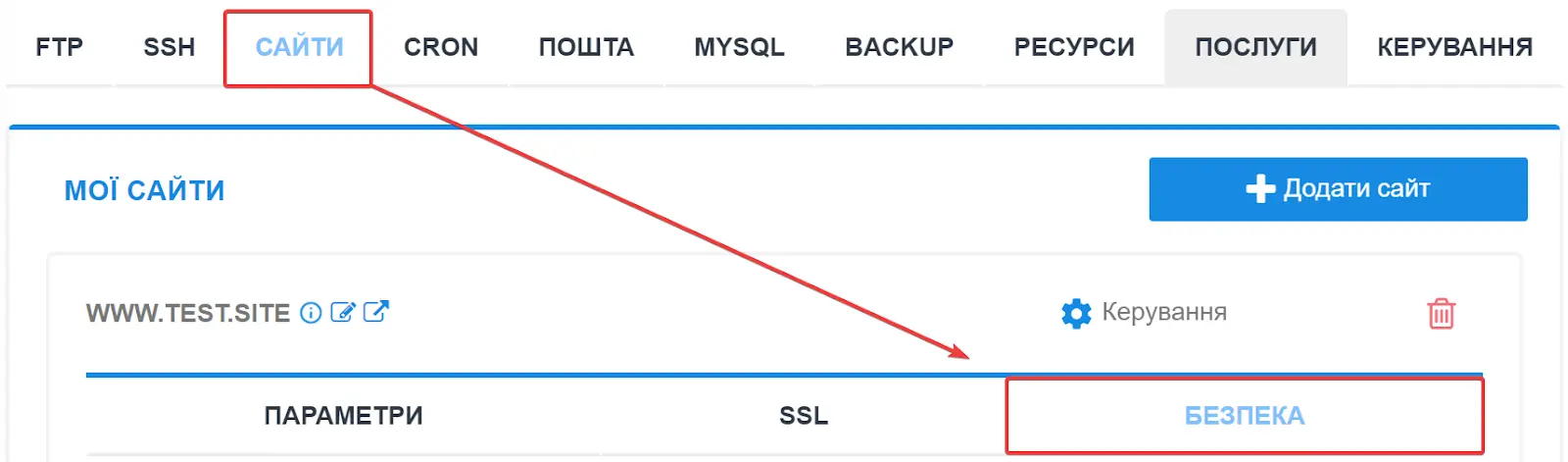

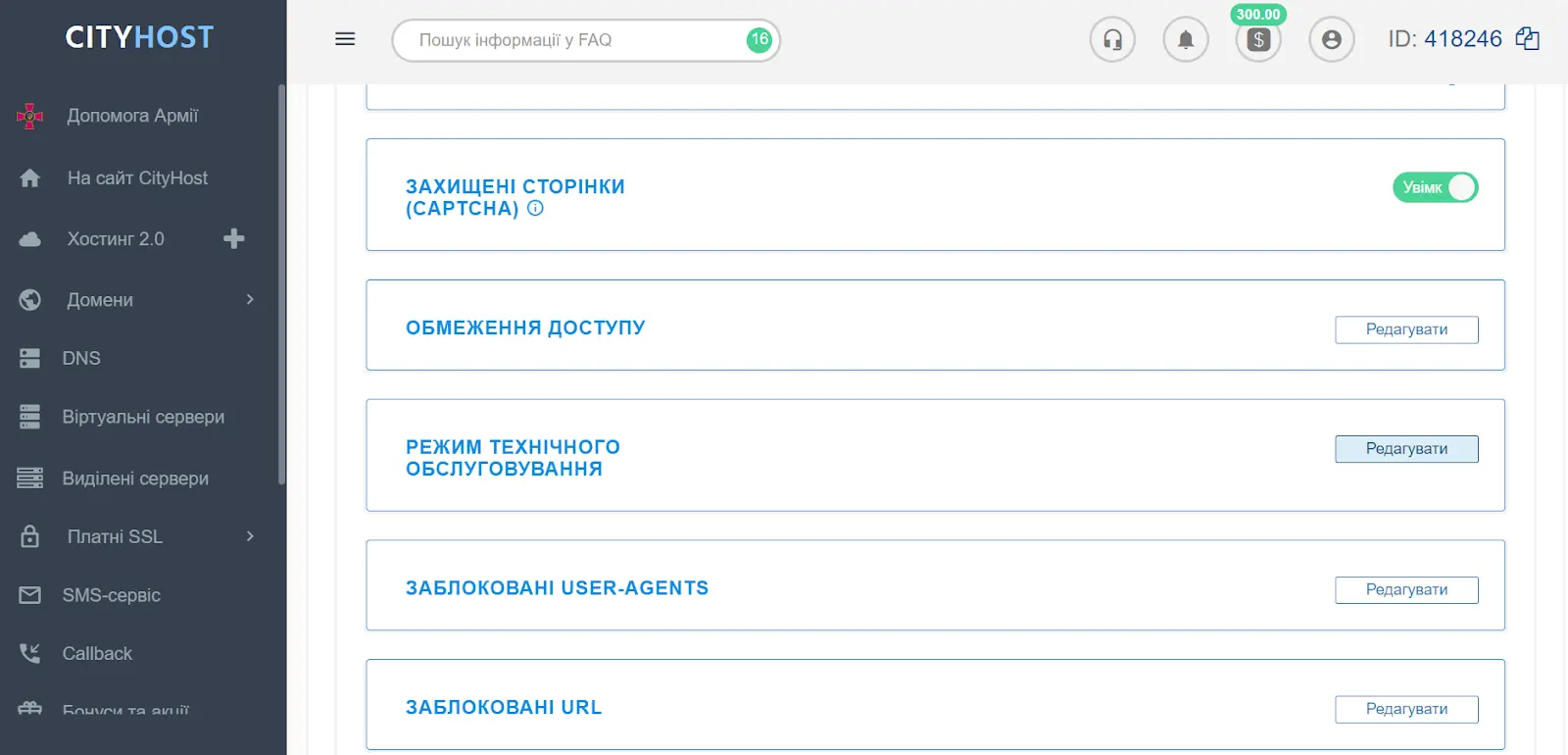

Open the cp.cityhost.ua panel and go to hosting management.

In the settings of the desired project, select "Security".

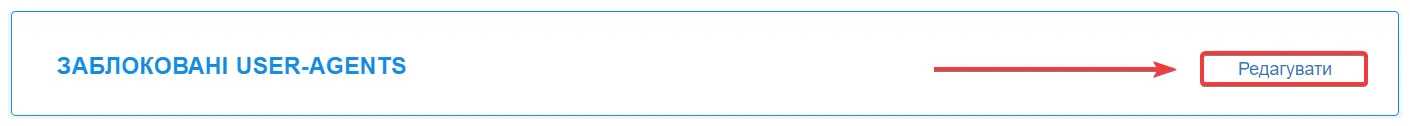

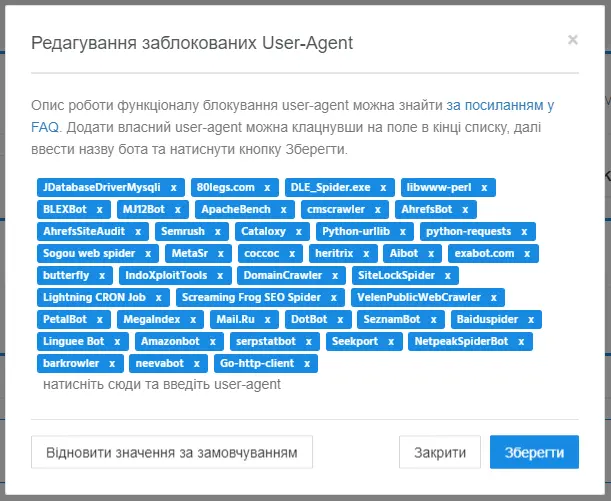

Click Edit next to Blocked User-Agents.

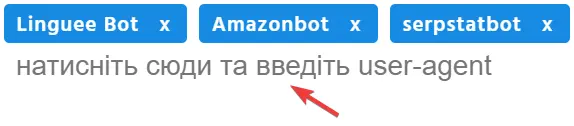

In the new window, enter a list of search engine robots, for example Googlebot, YandexBot, bingbot, Slurp (Yahoo search).

You can also add any malicious bot whose purpose is to collect statistics for third parties, scrape prices for goods and services, or look for hidden pages and vulnerabilities. Some malicious bots also create an excessive load on the web resource, which slows down page loading.

How to install a banner stub

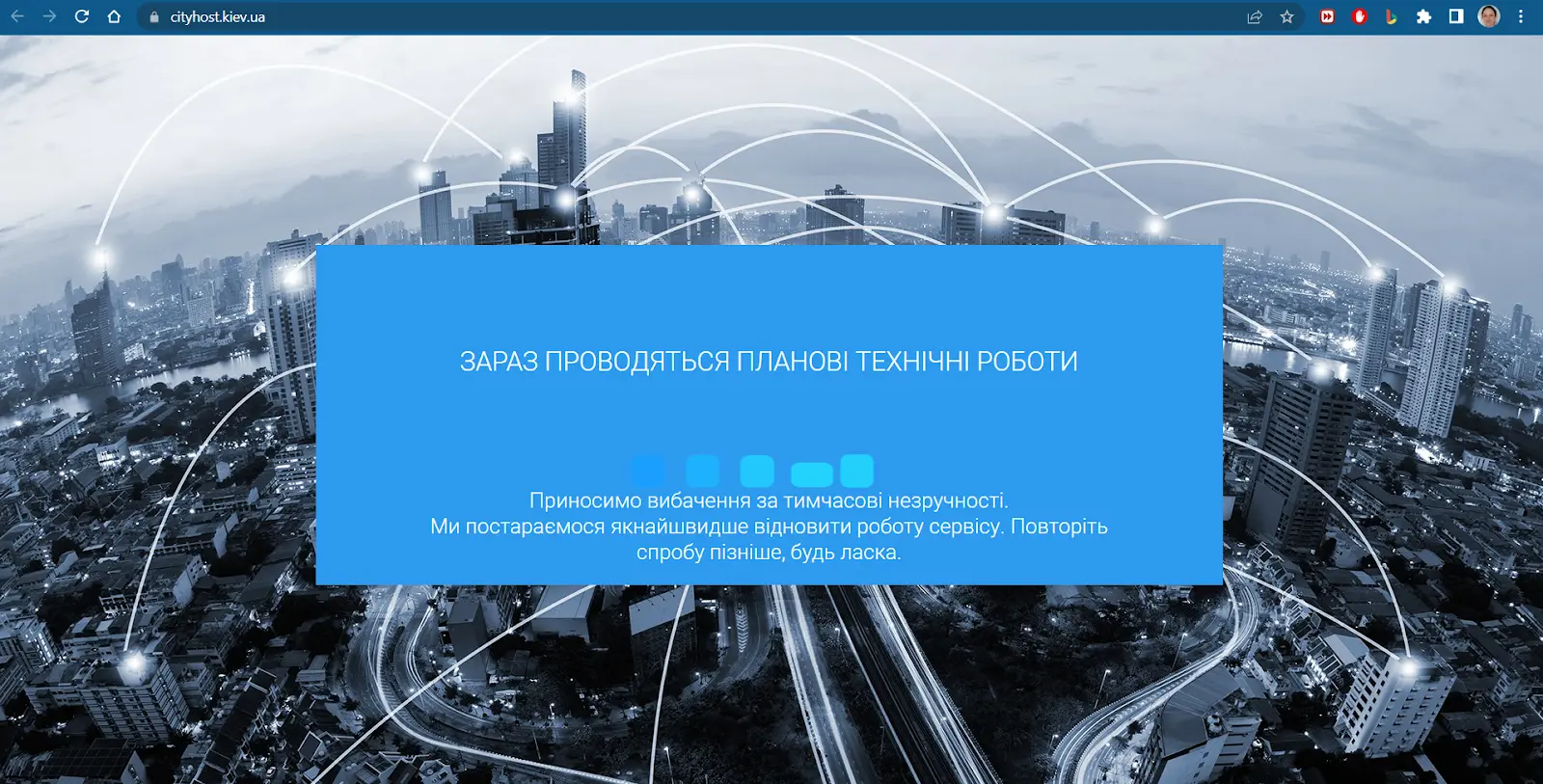

On Cityhost, you can just as easily close the site from visitors by installing a stub banner:

Log in to the control panel of the site on the hosting.

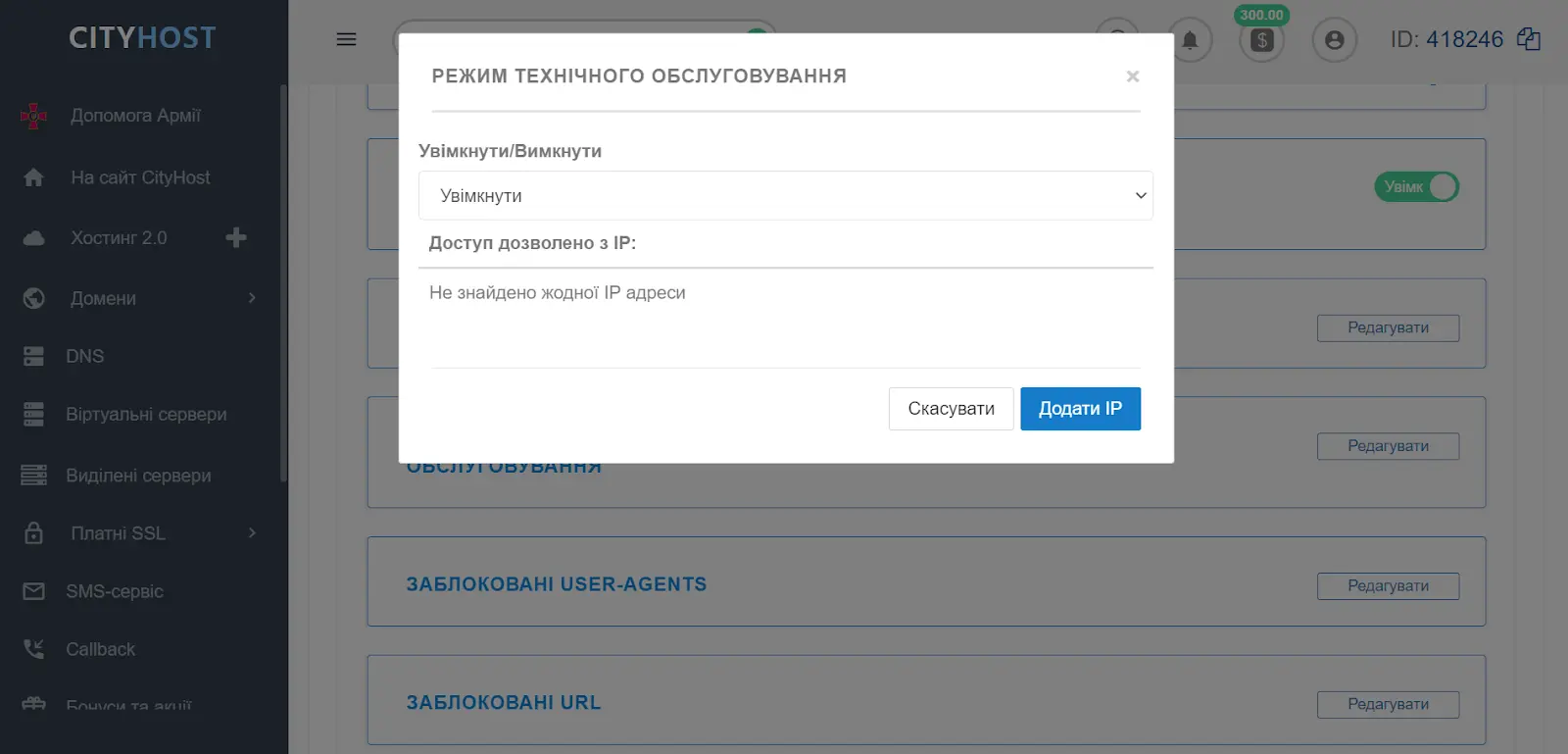

Click Edit next to the Maintenance Mode feature.

Select "Enable" from the list.

You can immediately add the IP addresses of people who are allowed to access the web resource. This is an incredibly useful feature if several people are working on the project at once, for example, a client, a tester, a content manager.
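
Conceptually, such an allowlist is a simple membership check on the visitor's address. The sketch below illustrates the idea with Python's standard `ipaddress` module; it is not Cityhost's implementation, the addresses come from the reserved documentation ranges, and support for whole networks (CIDR notation) is our assumption rather than a documented feature of the panel.

```python
import ipaddress

# Hypothetical allowlist: one single address and one whole network.
ALLOWED = ["203.0.113.10", "198.51.100.0/24"]


def can_bypass_maintenance(client_ip: str, allowed=ALLOWED) -> bool:
    """Return True if the visitor's IP is on the allowlist and should see the real site."""
    address = ipaddress.ip_address(client_ip)
    return any(
        address in ipaddress.ip_network(entry, strict=False)
        for entry in allowed
    )


if __name__ == "__main__":
    for ip in ("203.0.113.10", "198.51.100.77", "192.0.2.1"):
        print(ip, can_bypass_maintenance(ip))
```

Everyone whose address is not on the list, like the last one in the example, would see the stub instead of the site.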

How to find out if you managed to hide the site during development

In the article, we described effective ways to close the site during development from both visitors and crawlers. The stub is never a problem, as it can be installed instantly using the plugin or Cityhost tools. To check it, enter the address of the web resource in the browser's address bar and look at the result (we recommend doing this in a new tab in incognito mode).

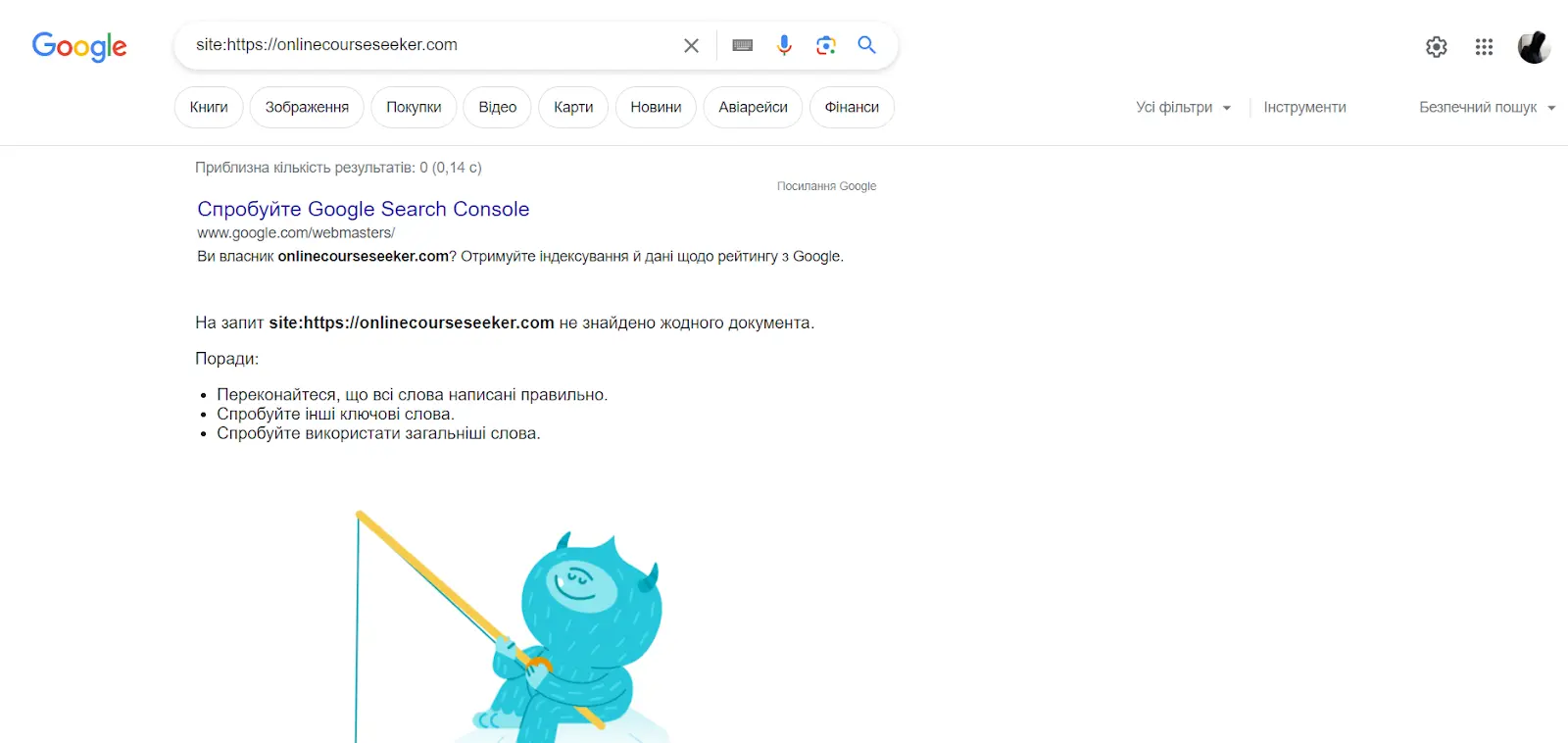

The general process of closing data from search robots is also simple, but the final result depends on the crawlers. In some cases, they may ignore the bans and index the web resource anyway. That is why you need to check periodically whether the site is really closed from indexing. You can do this with the help of:

search operator - enter site: followed by the domain of your resource (for example, site:example.com) in the search box, then see which pages appear in the results;

search console - the procedure depends on the specific search engine. For example, for Google the check is done in Google Search Console: log in to the service, select "URL Inspection" and enter the address of the web resource;

browser extension - use any extension for quick site analysis. For example, install and activate RDS Bar, then go to the project and see information about it.
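
If you check several projects regularly, the search-operator query can be generated automatically. The sketch below builds a Google search link for the `site:` operator with Python's standard `urllib.parse`; the function name is ours, and `example.com` is a placeholder.

```python
from urllib.parse import urlencode, urlsplit


def site_query_url(address: str) -> str:
    """Build a Google search URL with the site: operator from a domain or a full URL."""
    # Prepend "//" to bare domains so urlsplit treats them as a host name.
    host = urlsplit(address if "//" in address else "//" + address).hostname
    if not host:
        raise ValueError(f"cannot extract a host name from {address!r}")
    return "https://www.google.com/search?" + urlencode({"q": f"site:{host}"})


if __name__ == "__main__":
    # Placeholder domain; substitute the address of your own project.
    print(site_query_url("https://example.com/some/page"))
```

Open the printed link in a browser: while the site is closed, the results page should not list any of its pages.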

Perfect result: no page of your site is displayed! Of course, this result is ideal only while the project is under development. Once it is ready, allow crawlers to scan the pages again and show them in search results. If you need help closing a web resource or making other settings in our control panel, contact the Cityhost support service and our specialists will provide professional advice.