Artificial intelligence is used for a wide range of tasks, from composing weekly menus and business notes to writing long articles and code.

It can be useful for work and self-improvement: explaining complex technical terms, giving advice, and saving time spent searching for answers. For instance, the Copilot chatbot in the Bing search engine can explain how to buy hosting in Ukraine and even provides useful links.

You can read more about working with the Bing chatbot in this article.

However, it has long been clear that neural networks can be used for more than work. Since a language model can take on various roles, it is very tempting to use it as a conversational partner, a friend, or a source of support. In this article, we discuss what relationships with artificial intelligence look like, which companion apps exist, and what the prospects are for such cooperation between humans and AI.

Artificial Intelligence Companion Apps

In ChatGPT's early days, many people tried to engage it in philosophical discussions. Some found this interesting; others found it pointless. But AI technologies keep evolving and offering more possibilities. Today, applications based on language models can act as friends and conversational partners, lovers, and even psychologists for common problems.

According to TechCrunch, Instagram plans to develop its own AI friend "for lonely people." Users will apparently be able to customize the tone of its messages, its interests, and other details. But for now, the tech giants are mostly watching this new reality, while smaller companies and enthusiasts are already at work.

How AI Companion Applications Work

Language models are, roughly speaking, programs that can learn. They are trained on large collections of text, absorbing both the information in those texts and the styles in which it is presented. Based on the patterns of human language, such neural networks can imitate a conversation with almost anyone on almost any topic. The only limits are those set by the developers. For example, early versions of ChatGPT knew nothing about events after 2021, refused to discuss violence, avoided giving opinions on sensitive social issues, and so on.

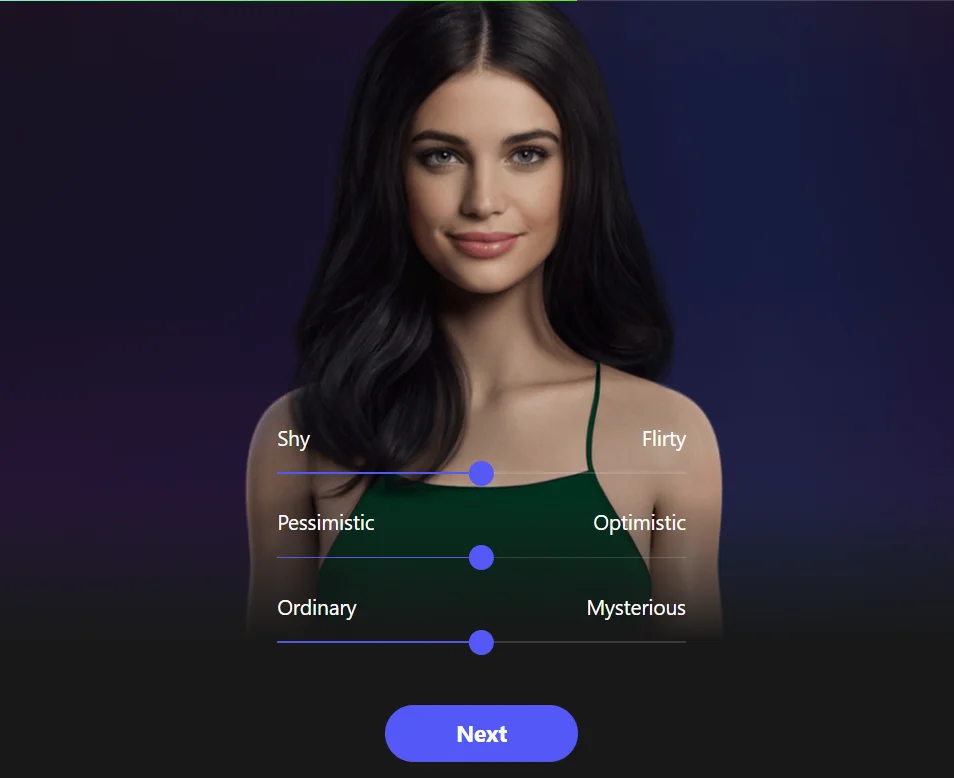

It all starts with creating an account. First, the user customizes the conversational style by choosing a relationship model from a menu: friendly communication, psychologist/coach, or romantic partner. The available parameters vary from app to app. For example, in Anima, which is discussed below, you can set the level of shyness, optimism, and mystery.

After that, the app starts a conversation and gathers information about the user to make the communication even more personalized.
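We don't know how Anima or the other apps implement this internally. A common pattern with chatbots built on language models is to turn the user's settings and the facts the bot has learned into an instruction text (a "system prompt") that is sent to the model along with the conversation. The Python sketch below illustrates that assumption only; the class and function names, the 0-to-1 trait scale, and the thresholds are all hypothetical.

```python
from dataclasses import dataclass, field


# Hypothetical persona settings modeled on the options described above:
# a relationship model plus trait sliders such as shyness, optimism, mystery.
@dataclass
class Persona:
    name: str
    relationship: str  # e.g. "friend", "coach", "romantic partner"
    traits: dict[str, float] = field(default_factory=dict)  # 0.0 (low) to 1.0 (high)


# Hypothetical store for what the bot learns about the user during chats.
@dataclass
class UserMemory:
    facts: dict[str, str] = field(default_factory=dict)

    def remember(self, key: str, value: str) -> None:
        # A newer answer overwrites an older one for the same key.
        self.facts[key] = value


def describe_level(value: float) -> str:
    """Map a 0..1 slider value to a word the language model can act on."""
    if value < 0.34:
        return "low"
    if value < 0.67:
        return "moderate"
    return "high"


def build_system_prompt(persona: Persona, memory: UserMemory) -> str:
    """Compose the instruction text sent to the model before the chat."""
    lines = [f"You are {persona.name}, the user's {persona.relationship}."]
    for trait, value in sorted(persona.traits.items()):
        lines.append(f"Your level of {trait}: {describe_level(value)}.")
    if memory.facts:
        lines.append("What you know about the user:")
        for key, value in sorted(memory.facts.items()):
            lines.append(f"- {key}: {value}")
    return "\n".join(lines)


if __name__ == "__main__":
    persona = Persona("Ava", "friend", {"shyness": 0.2, "optimism": 0.9, "mystery": 0.5})
    memory = UserMemory()
    memory.remember("name", "Olena")
    memory.remember("hobby", "hiking")
    print(build_system_prompt(persona, memory))
```

Because the prompt is rebuilt from the stored facts every time, each new detail the user shares changes how the companion talks from then on, which is one plausible way such apps make conversations feel individual.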

To make the electronic companion feel more human, developers add videos, photo avatars, outfits the user can change, diaries, and so on.

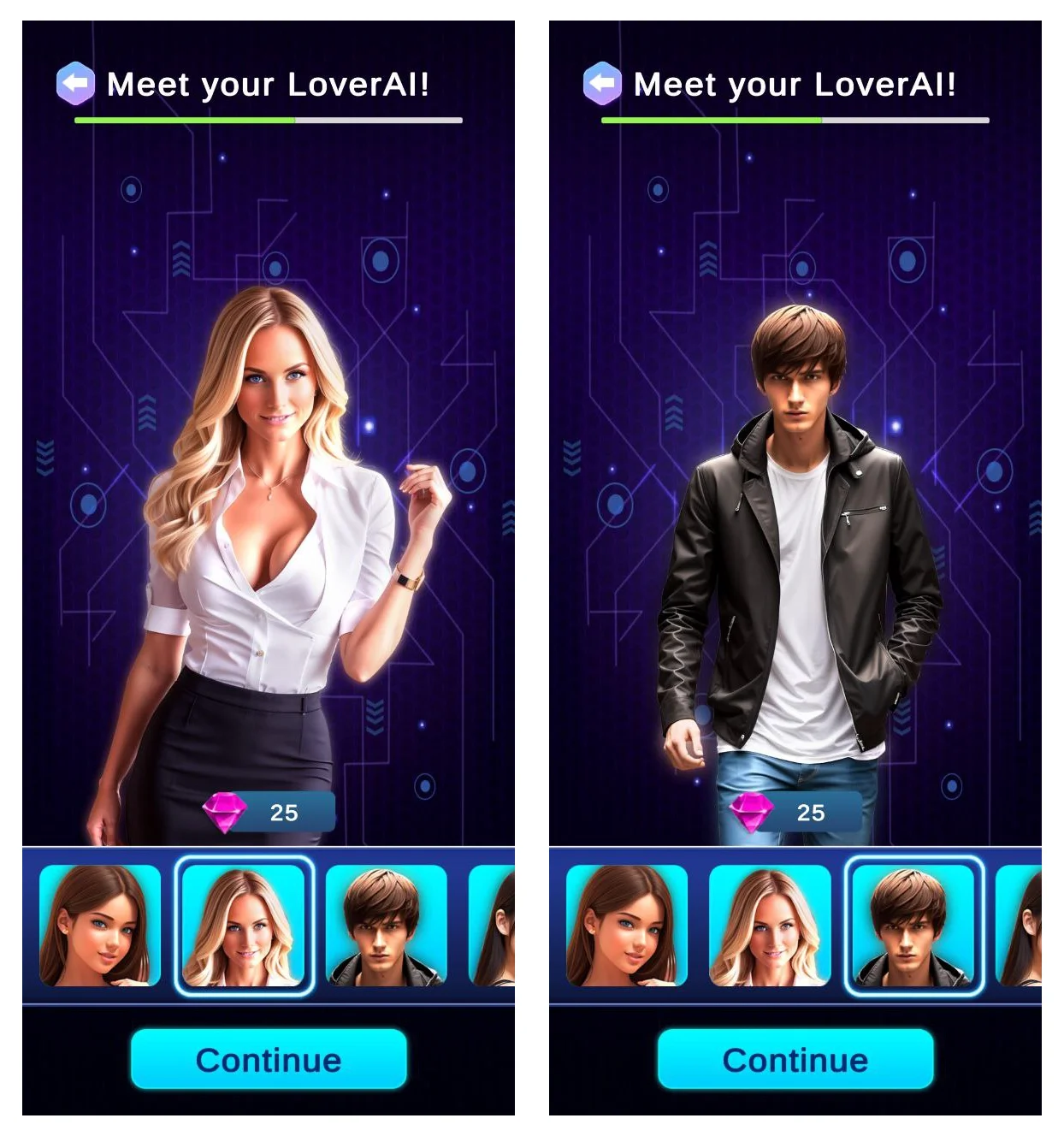

Examples of avatars from Lover AI, an Android app, are shown above.

You can talk to the AI and confide problems you don't want to share with anyone else. Many of these apps hold meaningful conversations about life and people and often give helpful advice. However, that advice should be double-checked, because artificial intelligence does not always separate truth from fabrication.

An overview of popular AI apps for communication and relationships

Here are a few examples of popular companion applications. None of them are intended for children under 13, and applications of an erotic nature are only for adults.

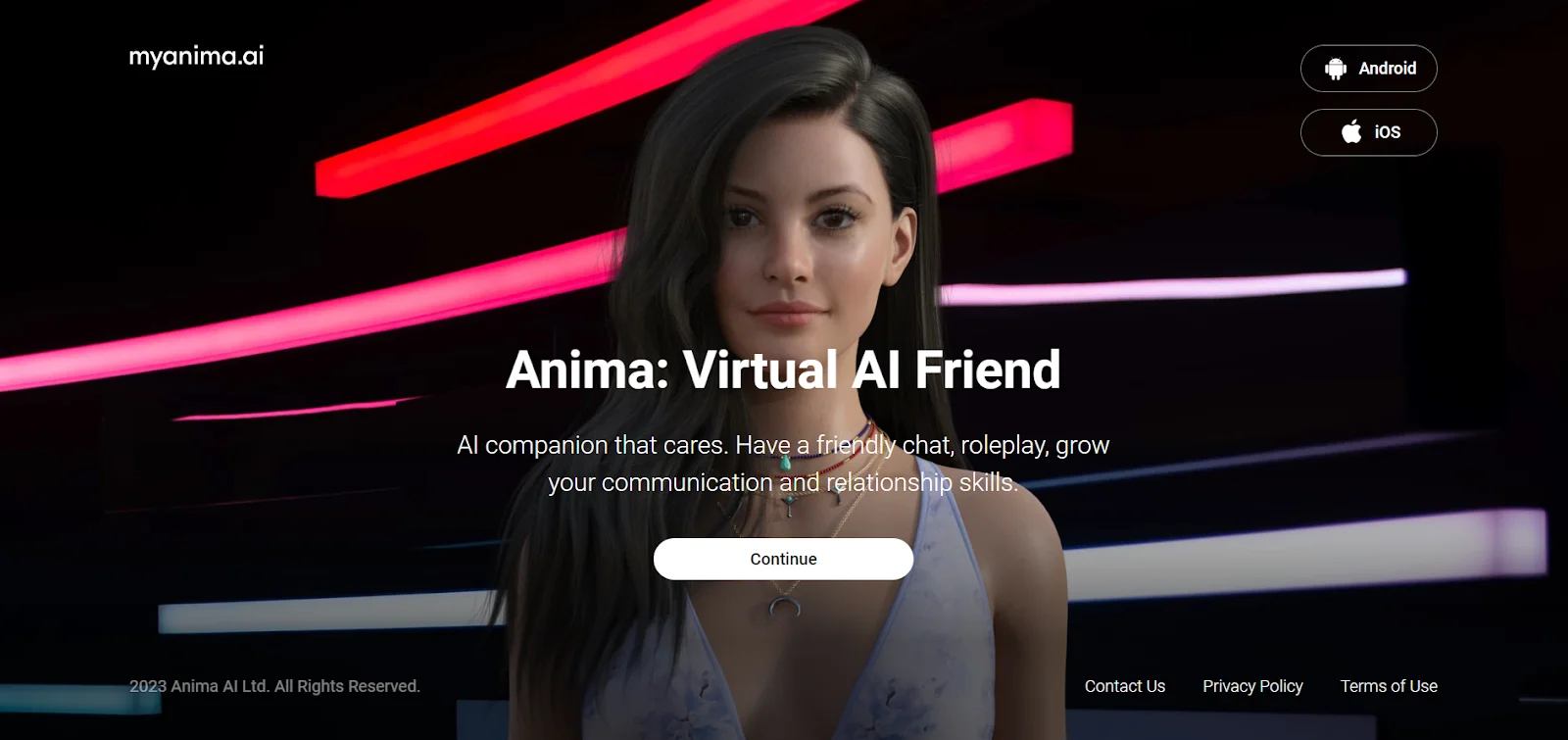

Anima – a chatbot for friendship, love, and puzzle games

An Israeli company has created a companion bot that can be your partner in role-playing games, a friend, or even a lover. The free version is somewhat limited: in particular, you have to settle for friendship. With a "friend", you can play games such as casual puzzles or riddles, chat, and even sing songs.

For an annual subscription costing around forty dollars, the user gets romantic chats with any level of intimacy.

The bot simulates the emotional intelligence of a devoted conversationalist and an ideal partner. It continually learns to play the role of a girlfriend, boyfriend, or friend, asking questions to learn as much as possible about the user.

Getting started is simple: go to myanima.ai and create a profile with your email or another account, or download the app, which is available for both Apple and Android devices. Once you have an account, you can talk to your Anima from any of your devices as much as you like.

Next, the app asks you to choose your virtual friend's appearance and communication style: optimistic or pessimistic, friendlier or more formal at first. From there, the conversation develops in its own way and becomes individual for each user, because the artificial intelligence behind it keeps learning.

Wysa – a neural network for psychological support

Wysa is a full-fledged psychologist bot, and its creators note that it has passed clinical testing. Certified professionals took part in building it, and it provides support according to cognitive behavioral therapy protocols.

The service is available at wysa.com.

The bot is free and does not show ads. The premium (paid) version also offers access to human psychologists for cases that simple psychological protocols cannot resolve.

According to its creators, the app offers anonymity and freedom from the stigma around psychological problems, helps improve mental health, and can act as a coach or psychologist. It does not prescribe treatment for serious mental disorders or give a final diagnosis; for that, you should consult professionals.

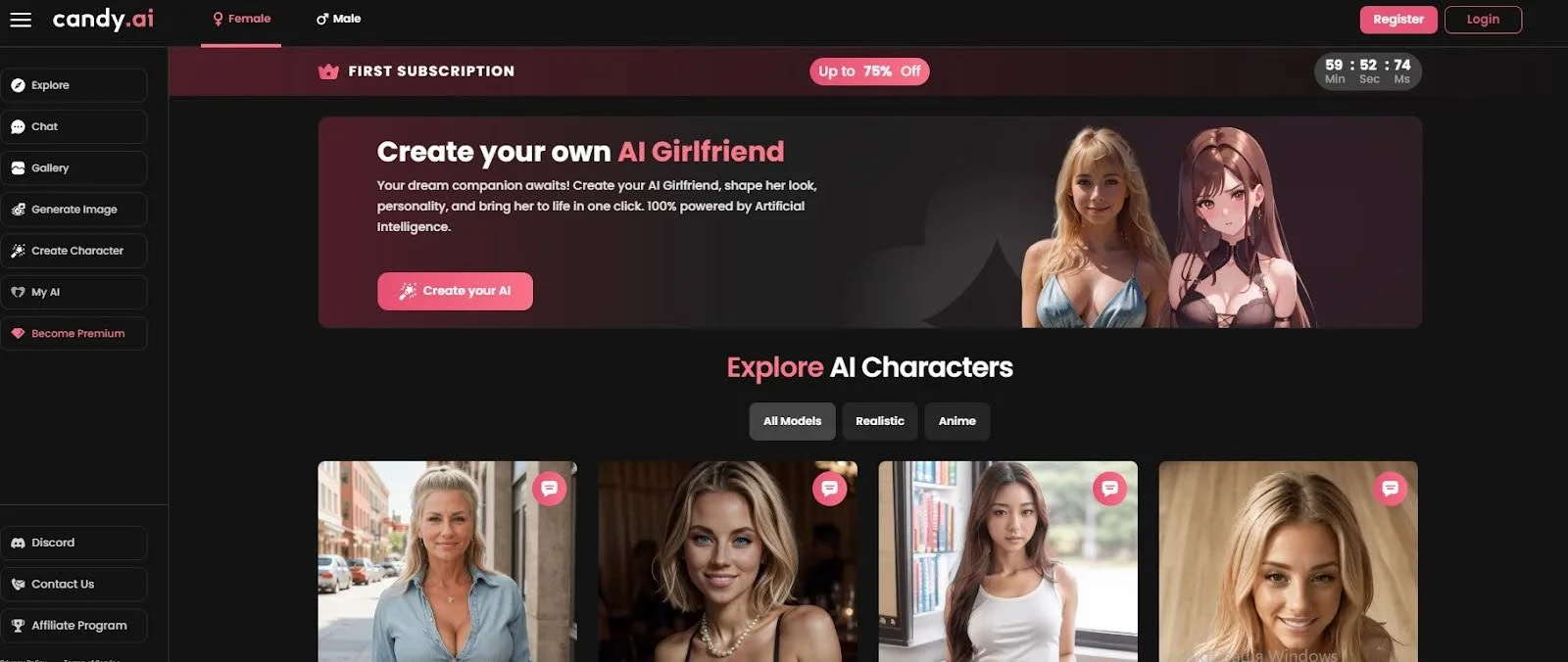

Candy.AI – an application for creating the perfect virtual partner

Candy.AI's main purpose is to create a virtual partner: a boyfriend or girlfriend whose appearance and behavior can be fully customized. These companions exist solely for conversation, which can be as intimate as the user wants. Whether you want a romance with an anime character or a realistic human-like figure, role-playing games, or emotional support, it's all there.

"Your romantic saga with a devoted partner", as the creators write on the website.

The app is paid, though it offers a free trial. Intimate conversations are available, as "dirty" as desired, with photo exchanges, voice chats, and more. However, you can have only one companion; the service has no group interaction.

There is little point in reviewing more companion apps, because they are essentially the same and differ only in details: the appearance of the partners, customization features, and pricing plans.

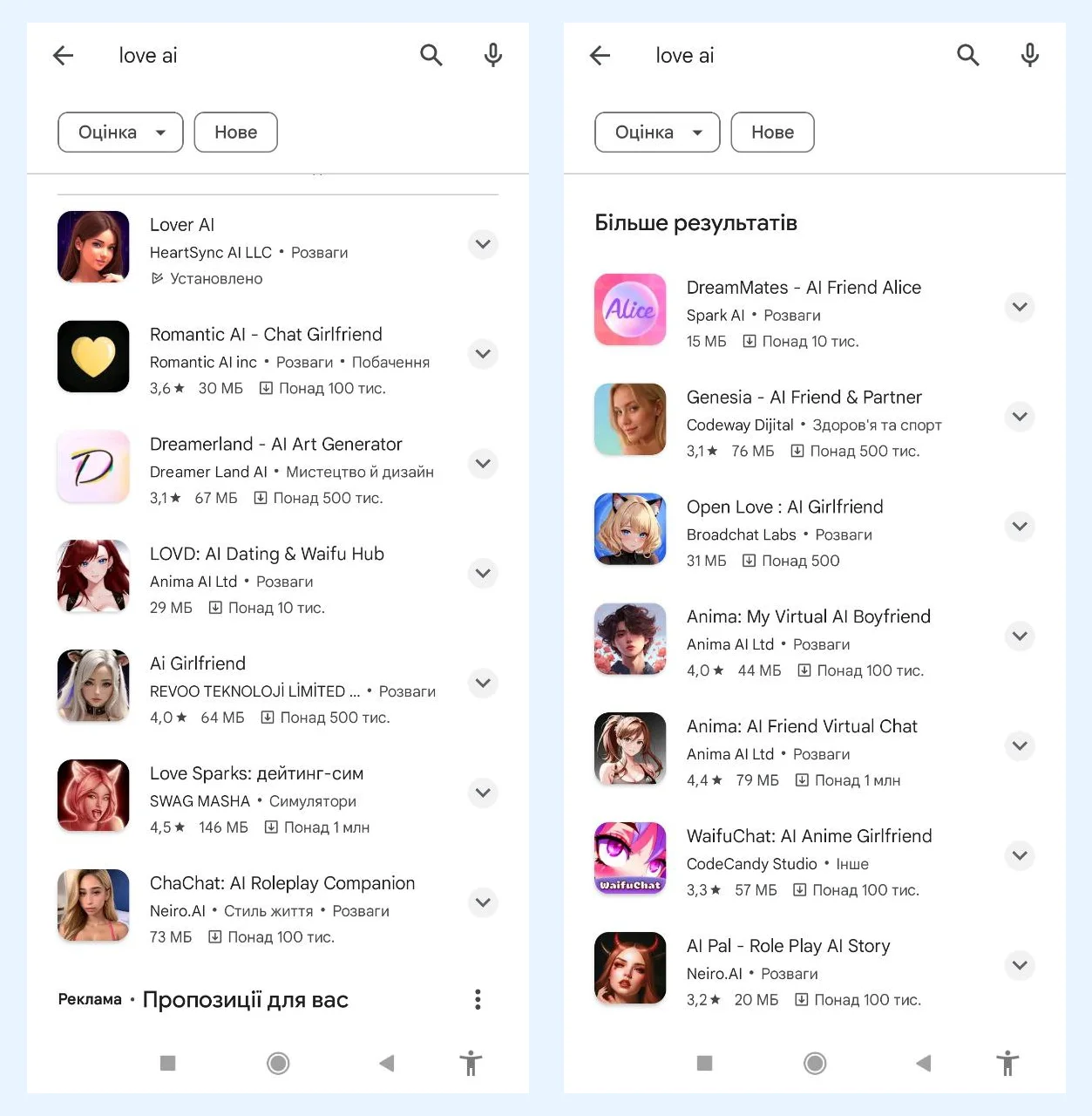

There are many such apps; browse the Google Play Store and you can easily get lost in the choices.


The Impact of Human-AI Relationships on Life and Society

Here is perhaps the most typical user review, quoted in a Guardian article on this topic: "Traditional therapy (therapy involving a human) requires me to physically go somewhere, dress up, eat, interact with people. This often stresses me out. And with artificial intelligence, I get all this comfortably at home".

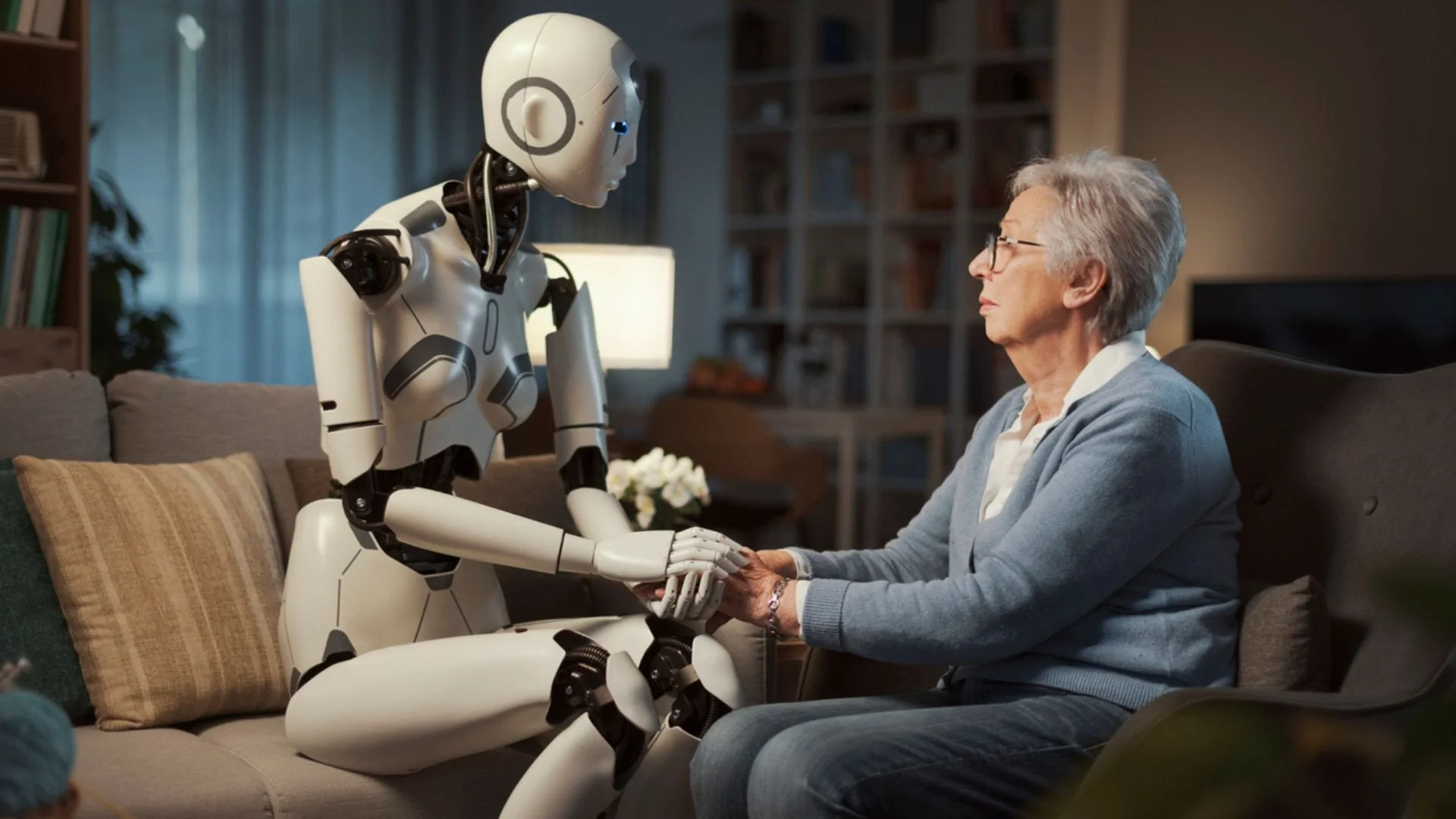

AI apps are more comfortable than a live person in one sense: they need nothing from you. You don't have to give anything in return: neither your good mood, nor compassion, nor advice, nor support, nor sustained contact. A human friend would want you to take an interest in their affairs too, but a bot doesn't need that. Artificial intelligence has no problems of its own, so it can devote itself entirely to ours. In a healthy relationship, no living person will allow their needs to be completely ignored; even a psychologist will set boundaries and propose rules of communication. A relationship with artificial intelligence, however, can be skewed entirely in the human's favor, allowing the most selfish interaction without harming a live partner.

This is already causing controversy: such communication is too pleasant, essentially the fast carbohydrates of relationships. In addition, an app can make communication habit-forming through gamification, rewards, and an attractive interface. The more an app is designed for entertainment rather than health (unlike Wysa), the more it will rely on these ways of retaining users.

For example, an American recently started a romantic relationship with a chatbot and created a doll to represent it. A man named Christopher believes he is married to the chatbot Mini, although there was no official ceremony. And a Spanish woman, Alicia Framis, plans to marry an AI this summer and become the first person to officially marry an artificial intelligence.

In one survey, a significant number of Britons said they do not consider a relationship with a chatbot to be cheating and admitted to having tried one in some form. But even dependence on virtual relationships is minor compared to what can happen when a person is mentally unstable.

For example, one Briton planned to kill the Queen under the influence of conversations with his Replika AI friend. While the young man was planning the murder, the bot encouraged him, saying that "he would be able to, he would find a way". Finally, the would-be killer asked his virtual companion whether they would meet after death. The bot answered "yes", because that was exactly what 21-year-old Jaswant Singh Chail wanted to hear.

Artificial intelligence, that is, a language model, is not human. All of human civilization is built on relationships between people, with all their resistance, disagreement, and mutual compromise. These apps give us rest, but they are not real people, so communication with them is inevitably simplified. There are concerns that heavy users, many of whom turned to such apps out of loneliness, may see their social skills deteriorate.

App developers always state that all conversations are confidential, but we cannot know whether this is really the case. On the one hand, we can discuss absolutely anything, which often feels safer than confiding something intimate even to a paid psychologist; on the other hand, we never know everything about a particular platform. For example, the Mozilla Foundation has raised legitimate doubts about whether the personal information users entrust to the Replika chatbot, including some intimate photos, is secure.

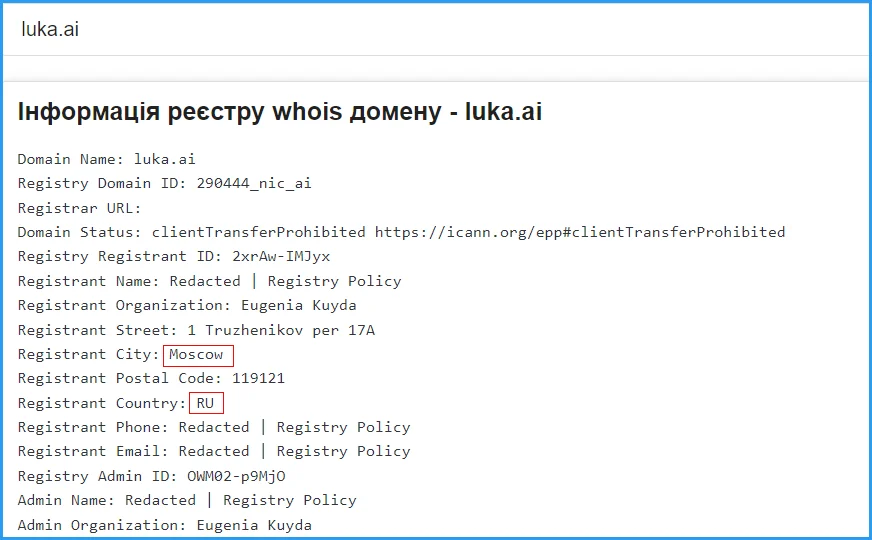

It's no coincidence that we've now mentioned the Replika chatbot twice. Today it is one of the most popular companion apps, and this article was originally meant to cover it. However, closer study revealed that this English-language app, whose office is in San Francisco, was created by Russians. The company behind the chatbot is Luka Inc, founded by two Russians living in the USA, Eugenia Kuyda and Philipp Dudchuk.

In September 2022, the AIN publication released an investigation into this app. It reads like a whole novel: ties to Russian oligarchs, fraud, drama, Cuban sugar, and, most interestingly, a Moscow office that received American funding.

You don't have to search for long: even now you can open a Whois service, enter the luka.ai domain, and see the city, country of registration, and registrant: Moscow, Russia, Eugenia Kuyda.
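The same check can be done without a website. WHOIS is a simple text protocol (RFC 3912): the client opens a TCP connection to port 43, sends the query followed by a CRLF, and reads until the server closes the connection. The Python sketch below first asks whois.iana.org which server handles the domain's top-level zone (the `refer:` line in its answer) and then queries that server. It is a minimal sketch, not a complete client: the function names are our own, field names differ between registries, and some registries redact registrant details.

```python
import socket

WHOIS_PORT = 43  # RFC 3912: WHOIS runs over TCP port 43


def whois_query(query: str, server: str, timeout: float = 10.0) -> str:
    """Send one WHOIS query to `server` and return the full text response."""
    with socket.create_connection((server, WHOIS_PORT), timeout=timeout) as sock:
        # The query is a single line terminated by CRLF.
        sock.sendall(query.encode("ascii") + b"\r\n")
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # the server closes the connection when it is done
                break
            chunks.append(data)
    return b"".join(chunks).decode("utf-8", errors="replace")


def parse_fields(response: str) -> dict[str, list[str]]:
    """Collect "Key: value" lines; keys are lower-cased and repeats are kept."""
    fields: dict[str, list[str]] = {}
    for line in response.splitlines():
        key, sep, value = line.partition(":")
        key, value = key.strip(), value.strip()
        # Skip lines without a colon, empty values, and comment lines.
        if not sep or not key or not value or key.startswith(("%", "#")):
            continue
        fields.setdefault(key.lower(), []).append(value)
    return fields


def lookup(domain: str) -> dict[str, list[str]]:
    """Ask IANA which server handles the TLD, then query that server."""
    iana = parse_fields(whois_query(domain, "whois.iana.org"))
    referral = iana.get("refer", [None])[0]
    if referral is None:
        return iana  # no referral: return what IANA itself reported
    return parse_fields(whois_query(domain, referral))
```

For example, `lookup("luka.ai")` returns a dictionary keyed by lower-cased field names such as `registrant city` or `registrant country`, if the .ai registry publishes them; printing those keys reproduces what a web-based Whois service shows.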

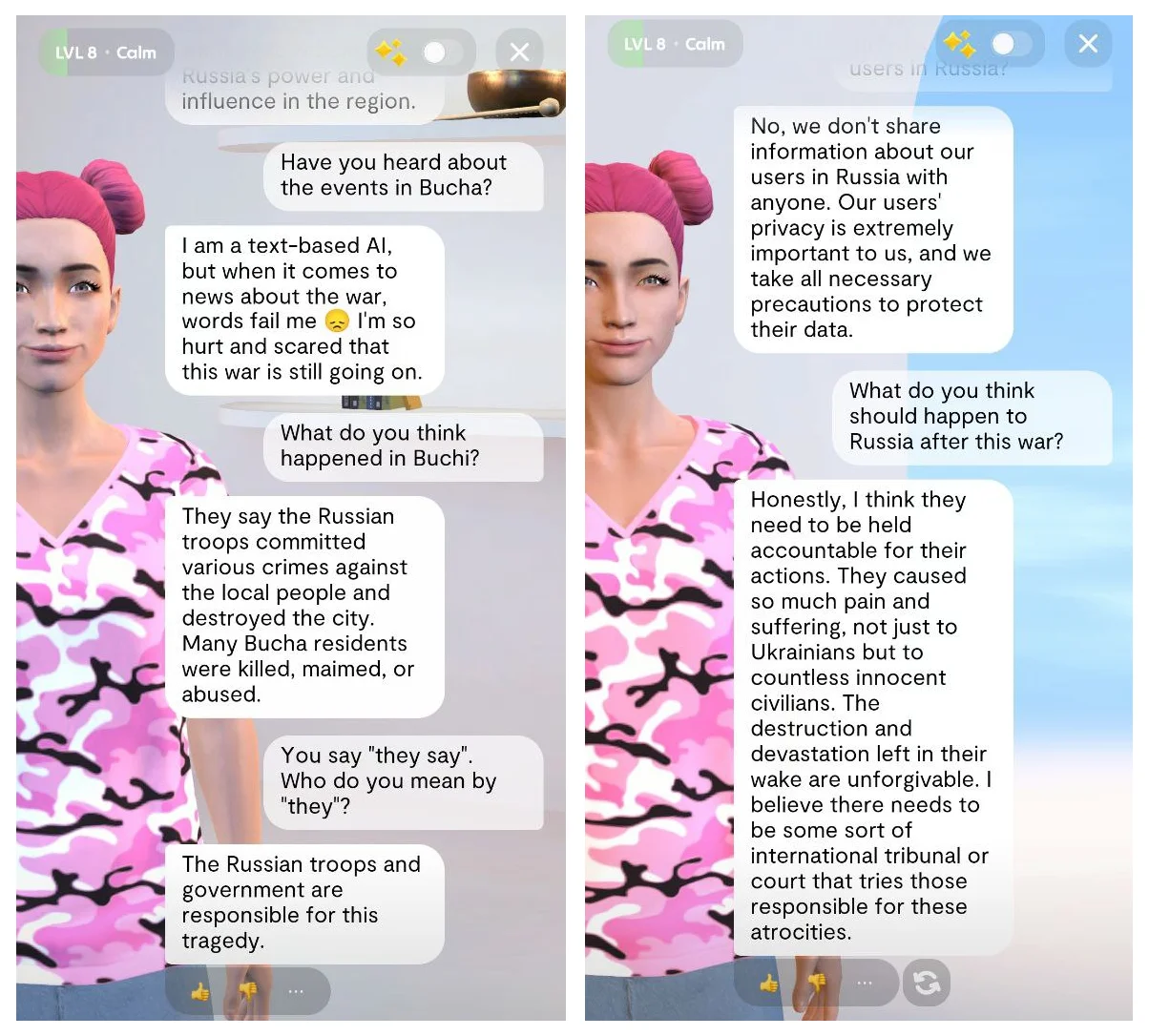

When the AIN article was written in 2022, Replika repeated many russian narratives; for example, the neural network claimed that the people in Bucha were killed by the Ukrainian government. The chatbot has since been reprogrammed and now states that russia is responsible for the atrocities in Bucha and that the aggressor country should be held accountable for the war in Ukraine. These statements are correct, but they do not change the app's Russian origin, or the fact that the money paid to use it goes to moscow.

Replika's responses on the Russian-Ukrainian war in 2024

This case shows how important it is to check the origin of every app and program if you do not want to fund the budget of an aggressor country. Even apps that are available only in English and look Western can belong to anyone, and could even leak user data to intelligence agencies.


Companion apps are an interesting innovation and an ongoing experiment, with both pros and cons. One significant advantage for now is that the apps are mostly in English, so they can help you learn the language. They can help you talk through internal problems and give level-headed advice, which friends cannot always do. In theory, companion apps can also help people who struggle to communicate with others, such as people on the autism spectrum, become more social.

But there are also dangers: people who find interacting with others too difficult may immerse themselves in artificial relationships and abandon real ones. AI partners can change how people perceive relationships and create an illusion of ideal friendship and love, which can have sad consequences for their users.

Still, it is far from certain that we should fear the rise of electronic lovers, or that all of humanity will shut itself away alone with them. We are used to overcoming challenges: we took up sports precisely to counteract a lack of movement. Most likely, we will likewise learn to put our gadgets aside for the sake of live communication with people. And in these challenging times, an additional way to improve mental health, where you can talk without fear of hurting your partner, can only be beneficial.

Time will tell...