Bots are programs designed to collect and analyze information on the Internet and to perform repetitive actions that a user might otherwise perform. For example, bots can parse data on websites, click on advertisements, fill out feedback forms, and give programmed answers in chats.

The term "bot" is used broadly: it refers both to automated responders on social networks and websites (chatbots) and to people who manage fake accounts on social networks. This article covers bots that visit a site and perform certain actions there without the owner's knowledge.

Because it works much faster, a bot can perform hundreds of times more operations than a human in the same amount of time. Using a bot is also cheaper than hiring employees, for example to collect data. That is why bots are at the heart of automating many processes on the Internet.

More than 40% of Internet traffic is generated by bots. According to Statista research, malicious bots generated 27.7% of traffic in 2021, while useful bots accounted for only 14.6%. Humans accounted for the remaining 57.7%.

Every bot that visits a site creates some load. Traffic from useful bots is something you have to accept, but malicious bots cause several problems at once, depending on their purpose. Besides loading the site, these include unauthorized data collection, DDoS attacks, and bypassing protection systems.

Whether your site is hosted on a dedicated server or a VDS, bots consume resources and slow down your load times.

Let's look at what harmful and useful bots are and what types of each exist.

What are malicious bots and how do they differ from useful ones

Beneficial and malicious bots are technically very similar — the difference lies in the purpose of their use. Depending on the goals, they are divided into narrower categories.

Harmful bots:

Spy bots scan sites for poorly secured user contact information in order to build databases for spam and other unauthorized activities. If you have started receiving suspicious emails, one likely scenario is that you left your address on a poorly protected site, and a bot found it and added it to such a database.

Clickbots, as the name suggests, click: more precisely, they follow links. They cause the most trouble in Google advertising, draining budgets on pay-per-click ads. Competitors often use this method.

Password-cracking bots try to guess the passwords to administrator or user accounts on the site.

Spammer bots fill out feedback forms and leave advertising comments with links that often lead to phishing sites.

Downloader bots download files to create the appearance that the content is in demand.

DDoS bots are often user devices infected with malware. They send a large number of requests to the site in order to take it down.

Useful bots:

Search bots (crawlers) are search engine robots that scan site pages in order to index them, rank them, and show them in search results (SERP).

SEO analytics service bots are tools of Ahrefs, Serpstat, Semrush, and similar services that monitor the site indicators SEO specialists need for their work (traffic, backlinks, keywords for which the site ranks).

Content uniqueness scanners are used by copywriters to check texts. A plagiarism checker compares a text against the articles available on the web in order to identify copied passages.

This classification covers only the most common bots; in reality there are many more. It is also quite loose, because the same bot can be used for different purposes. For example, aggressive data parsing can slow a site down or even bring it to a halt, yet parsing is also useful for sites, because it is part of how search engines, price comparison services, and many other platforms work.

How to monitor the activity of bots on the site

Several signs can point to bot activity:

Traffic spikes: a sudden surge in traffic that is not related to marketing promotions may indicate bot activity.

An increase in the load on the server can also indicate the activity of bots.

Requests from regions where you do not do business.

High bounce rate: bots generate requests but spend almost no time on the site. IP addresses used by bots typically show a bounce rate at or near 100%.
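The first sign, a traffic spike, can be checked with a few lines of code once request timestamps have been extracted from the logs. The following is only a minimal sketch: the function names, the one-minute bucket, and the threshold of five times the median are illustrative assumptions, not part of any Cityhost tool.

```python
import statistics
from collections import Counter
from datetime import datetime, timedelta


def requests_per_minute(timestamps):
    """Count requests in each one-minute bucket (minutes with no requests are not listed)."""
    return Counter(ts.replace(second=0, microsecond=0) for ts in timestamps)


def find_spikes(timestamps, factor=5.0):
    """Return the minutes whose request count exceeds factor * the median count.

    The factor of 5 is an arbitrary assumption; tune it for your own traffic.
    """
    counts = requests_per_minute(timestamps)
    if not counts:
        return []
    typical = statistics.median(counts.values())
    return sorted(minute for minute, n in counts.items() if n > factor * typical)


if __name__ == "__main__":
    start = datetime(2024, 1, 1, 12, 0)
    # Ten quiet minutes with two requests each, then one burst of fifty requests.
    quiet = [start + timedelta(minutes=m, seconds=s) for m in range(10) for s in (5, 40)]
    burst = [start + timedelta(minutes=10, seconds=s) for s in range(50)]
    print(find_spikes(quiet + burst))
```

A spike found this way is only a hint. It still has to be compared with marketing activity and with the other signs before anything is blocked.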

The best way to see bot activity on a site, however, is to analyze the logs: records of visits to the site that contain various data about each visitor. One such field is the user agent, an identifier from which you can determine the device or program that accessed the site. Browsers, search robots, mobile applications, and other clients all have user agents.

Each bot has its own name: for example, Google's user agent is called Googlebot, the Ahrefs parser is AhrefsBot, and SE Ranking's is SE Ranking bot.
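To illustrate how the user agent can be pulled out of a log, here is a minimal sketch that counts visits by bot name. It assumes the logs use the common "combined" format, where the user agent is the last quoted field. The sample lines, IP addresses, and the `KNOWN_BOTS` list are made up for illustration.

```python
import re
from collections import Counter

# Assumption: "combined" log format, where the user agent is the last quoted field.
LOG_LINE = re.compile(r'^(?P<ip>\S+) .* "(?P<agent>[^"]*)"\s*$')

# Bot names mentioned in the text; extend the tuple as needed.
KNOWN_BOTS = ("Googlebot", "AhrefsBot")


def label(agent):
    """Map a raw user-agent string to a known bot name, or to 'other'."""
    lowered = agent.lower()
    for name in KNOWN_BOTS:
        if name.lower() in lowered:
            return name
    return "other"


def count_visitors(lines):
    """Count log lines per label; lines that do not match the format are skipped."""
    counts = Counter()
    for line in lines:
        match = LOG_LINE.match(line)
        if match:
            counts[label(match.group("agent"))] += 1
    return counts


if __name__ == "__main__":
    sample = [
        '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 2326 "-" '
        '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"',
        '198.51.100.7 - - [10/Oct/2023:13:55:40 +0000] "GET /blog HTTP/1.1" 200 512 "-" '
        '"Mozilla/5.0 (compatible; AhrefsBot/7.0; +http://ahrefs.com/robot/)"',
        '192.0.2.9 - - [10/Oct/2023:13:56:01 +0000] "GET / HTTP/1.1" 200 2326 "-" '
        '"Mozilla/5.0 (X11; Linux x86_64; rv:109.0) Gecko/20100101 Firefox/115.0"',
    ]
    print(count_visitors(sample))
```

Keep in mind that a user agent is just a string the client sends, so a malicious bot can present itself under any name, including that of a search engine.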

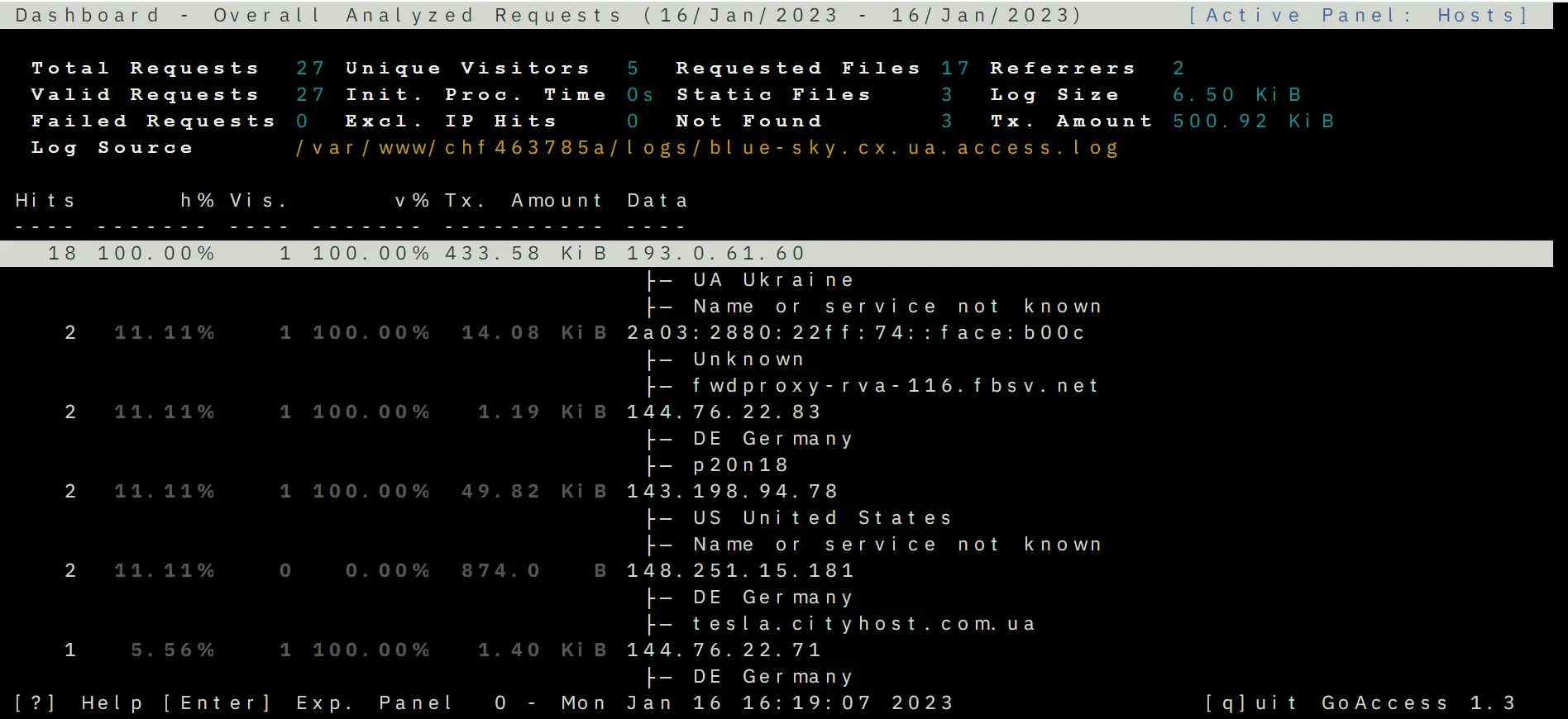

To view the logs, use the GoAccess tool provided by Cityhost, which sorts requests and provides statistics. It can be found under Hosting => Management => SSH => Web SSH: Open. Next, we recommend following the instructions on how to analyze logs using GoAccess.

With it, you can see the country from which the site was accessed, the IP address, the user agent, and other parameters.

If a bot does not have a user agent (shown as Unknown robot), it is most likely the work of an amateur or a lone hacker, who can be banned by IP address. How to do this is described below.

Site protection from malicious bots

One of the problems with protecting against bots is that they mimic user actions, and the server does not distinguish between requests from bots and requests from people. There is no magic "Protect the site against bots" button that removes all difficulties in one click. Each class of bots requires its own protection, and some uninvited guests have to be filtered out manually.

You should close access to the site not only for openly harmful bots, but also for those that bring no benefit and create unnecessary load, for example an analytics service or a search robot that you do not need for your work.

Webmasters face a number of complex issues that cannot always be resolved, such as protection against parsing. Automatic methods cannot distinguish good parsing from bad, and parsing itself is not illegal. Anyone can view and collect data from sites; that is what they were created for. When a user flips through pages and writes down prices, that is also data collection; a parser simply does it faster. Such subtleties complicate site protection and make it a continuous process. You need to constantly monitor traffic and analyze logs in order to spot visits by malicious bots and block them manually.

Since bots are often used for DDoS, we recommend that you read the article on how to protect yourself from DDoS attacks.

Methods of blocking malicious bots

This section mostly covers tools available to Cityhost customers. If you use the services of other providers, the methods may be slightly different.

Blocking traffic sources

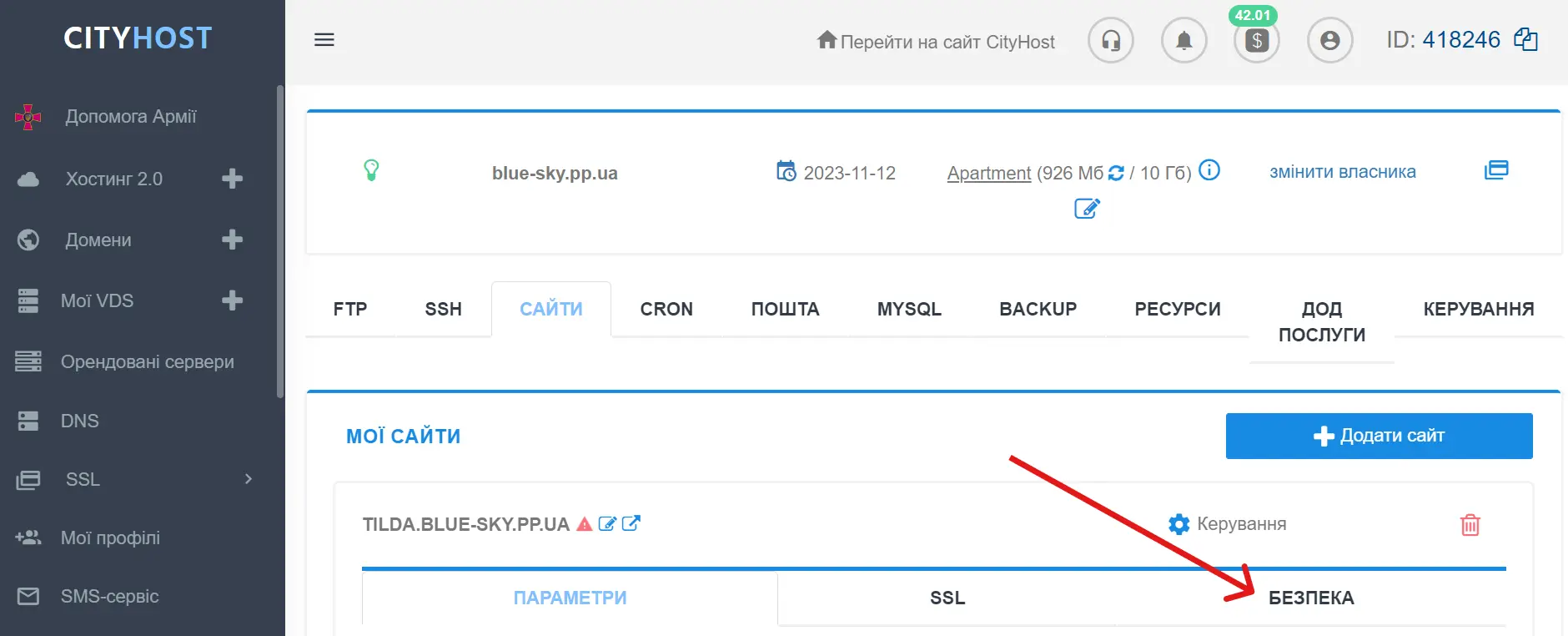

In the Cityhost service control panel, you can manually block suspicious traffic sources by IP address and URL. You can find blocking tools in the section Hosting => Management => Security.

User-agent blocking deserves a separate mention: this tool already has a built-in list of widespread malicious bots. You can enable or disable the tool, uncheck individual user agents, or select all of them. If you want to block specific user agents manually, there is a separate procedure for this, described later in the article.

Additionally, in the "Security" section, you can set a password for certain directories so that only those who know this password have access to important site folders in the file manager.

Captcha for logging into the administrator account

In the same section, you will find a captcha created by Cityhost. It protects the login to the admin panel on our site and in a CMS installed on the hosting. This is a very simple and unobtrusive filter: you only need to check the "I'm not a robot" checkbox, with no complicated procedures. A bot, however, cannot perform even such a simple action.

Blocking bots in .htaccess

.htaccess is a file in the root folder of the site that contains settings for how the web resource operates (redirects, custom error pages, blocking access to the site or to individual files). In this file, you can ban the user agents of malicious bots so that they can no longer scan the site.

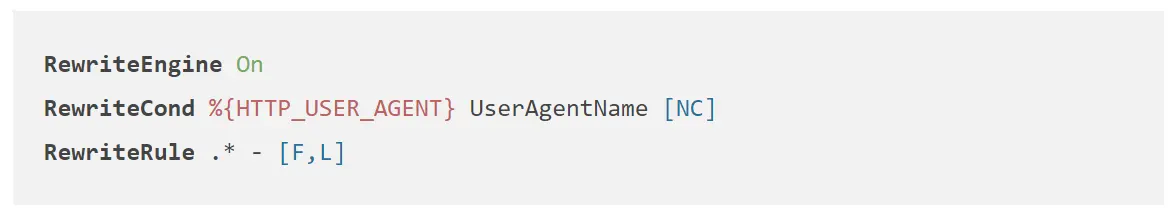

To do this, you need to open .htaccess in the file manager and write the following rules in it, where UserAgentName should be replaced with a real user agent.

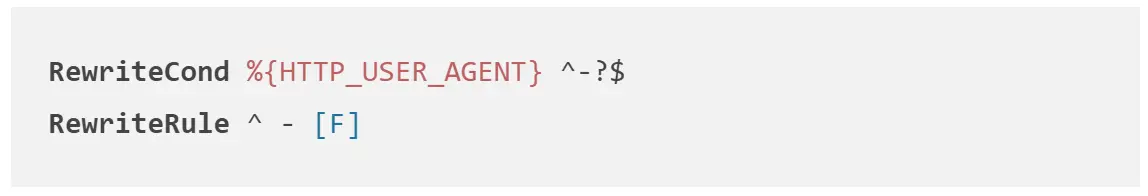

You can also block requests that do not send a user agent at all.
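As an illustration of what such rules can look like, here is a small helper that generates them. This is a sketch, not a copy of the rules from the screenshot: it assumes Apache with mod_rewrite enabled and uses a common `RewriteCond`/`RewriteRule` pattern that answers matching requests with 403 Forbidden. The function name and the bot name `BadBot` are hypothetical.

```python
import re


def build_htaccess_rules(user_agents, block_empty=False):
    """Render mod_rewrite rules that return 403 Forbidden to the given user agents.

    user_agents: substrings to look for in the User-Agent header.
    block_empty: also block requests whose User-Agent is empty or "-".
    """
    patterns = [re.escape(name) for name in user_agents]
    if block_empty:
        patterns.append("^-?$")
    if not patterns:
        return ""
    lines = ["RewriteEngine On"]
    for index, pattern in enumerate(patterns):
        # Conditions are chained with OR; the last one closes the chain.
        flags = "[NC]" if index == len(patterns) - 1 else "[NC,OR]"
        lines.append(f"RewriteCond %{{HTTP_USER_AGENT}} {pattern} {flags}")
    lines.append("RewriteRule .* - [F,L]")
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # "BadBot" is a placeholder; use real names taken from your logs.
    print(build_htaccess_rules(["BadBot"], block_empty=True))
    # RewriteEngine On
    # RewriteCond %{HTTP_USER_AGENT} BadBot [NC,OR]
    # RewriteCond %{HTTP_USER_AGENT} ^-?$ [NC]
    # RewriteRule .* - [F,L]
```

The generated text can be pasted into .htaccess. Check the site right after saving, because a syntax error in this file can make the whole site return a server error.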

Protection against spam bots

Bots that leave comments on the site and fill out feedback forms need special attention. The following methods protect against them:

A captcha in the form usually looks like an "I'm not a robot" checkbox and does not complicate the user's interaction with the site.

Email verification means sending a message to the entered address so that the user follows a link and confirms it. Bots generate hundreds of non-existent addresses, so this method ensures that only people with real email addresses can leave comments or submit forms.

Bot traps are invisible fields that users will not fill out simply because they cannot see them. Bots fill in every field, so they can be detected and filtered out this way.
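The bot trap idea can be sketched on the server side as follows. The field name `website` and the function name are hypothetical. The sketch assumes the form contains an extra input hidden from people with CSS and that submitted fields arrive as a dictionary of strings.

```python
# Hypothetical name of the hidden trap field; people never see it, so they leave it empty.
HONEYPOT_FIELD = "website"


def is_probable_bot(form_data):
    """Return True when the hidden trap field was filled in."""
    value = form_data.get(HONEYPOT_FIELD, "")
    return bool(value.strip())


if __name__ == "__main__":
    human = {"name": "Ann", "comment": "Thanks for the article!", "website": ""}
    bot = {"name": "x", "comment": "Buy now", "website": "http://spam.example"}
    print(is_probable_bot(human))  # False
    print(is_probable_bot(bot))    # True
```

Submissions flagged this way are usually dropped silently, so the bot receives no signal that it was detected.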

How to manage the activity of useful bots

Useful bots sometimes lack a sense of measure too and send too many requests, overloading the site. They can, however, be "slowed down". Each bot has its own settings, most of which are described in the instructions on its official website. For example, Google, Ahrefs, and Serpstat each have pages dedicated to their crawlers.

You can find such instructions for any useful bot and limit the duration and frequency of its crawls so that it does not overload the site.
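One widely used way to limit crawl frequency is the `Crawl-delay` directive in robots.txt. It is a non-standard directive: some crawlers honor it, while others, Googlebot among them, ignore it and are configured by other means, so each bot's own instructions remain the authority. The sketch below uses Python's standard `urllib.robotparser` to check what delay a robots.txt file declares for a given bot. The file content and the 10-second value are illustrative assumptions.

```python
from urllib.robotparser import RobotFileParser

# Illustrative robots.txt; the bot name and the delay are assumptions for this example.
ROBOTS_TXT = """\
User-agent: AhrefsBot
Crawl-delay: 10

User-agent: *
Disallow: /admin/
"""


def crawl_delay_for(robots_txt, user_agent):
    """Return the Crawl-delay (in seconds) declared for user_agent, or None."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.crawl_delay(user_agent)


if __name__ == "__main__":
    print(crawl_delay_for(ROBOTS_TXT, "AhrefsBot"))  # 10
    print(crawl_delay_for(ROBOTS_TXT, "Googlebot"))  # None
```

The check only shows what the file declares. Whether a particular crawler obeys it has to be confirmed in that crawler's documentation.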

Finally, note that all types of malicious bots can be blocked using the Cloudflare service, which we covered in a separate article. Check it out and use this platform for extra protection.

How to analyze traffic from bots on the site

After implementing any of the protection methods, you can check whether the load on the site has decreased in your hosting admin panel: Hosting 2.0 => Management => Resources => sections "General information", CPU, Mysql. Keep in mind that the changes will not be visible right away: allow at least a few hours to pass.

To protect the site from unnecessary requests, we recommend regularly monitoring load indicators, logs and traffic in order to block bots in time.
