- How Website Indexing Works

- How to Check If a Page Is Indexed by Google

- How to Check Indexing with Ahrefs

- How to Speed Up Website Indexing

- How to Avoid Indexing Problems

- When to Block a Website from Indexing

- Why a Page Is Not Indexed

- How Hosting Affects Website Indexing

Indexing is the foundation of a website’s visibility on Google. Without it, even the best content won’t appear in search results, and visitors won’t find your pages. Put simply, indexing is when search engines “get acquainted” with your site, read its pages, and decide whether to show them to users.

Everything you publish, whether news, blog posts, products, or service pages, must be indexed before it can receive organic traffic. That’s why monitoring indexing is part of the everyday SEO routine: without it, organic growth is impossible.

How Website Indexing Works

Indexing is a complex yet logical process in which search engines gradually process a website’s pages to make them accessible in search results.

The indexing process consists of several stages:

- Crawling. Googlebot follows links, discovering new pages and checking for updates on existing ones.

- Rendering. The bot loads the page much as a browser would and reads the code, text, images, meta tags, and headings to determine what the page is about and for which queries it may be useful.

- Indexing. All information about the page is stored in Google’s vast database, where it can be found for relevant searches.
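The crawling stage can be illustrated with a small sketch. This is not how Googlebot is implemented; it only shows the basic idea of discovering same-site pages by following links. The function name `discover_links` and the sample HTML are hypothetical and exist only for this example.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def discover_links(page_url, html):
    """Return same-site absolute URLs linked from the page, in first-seen order."""
    collector = LinkCollector()
    collector.feed(html)
    site = urlparse(page_url).netloc
    found = []
    for href in collector.hrefs:
        # Resolve relative links and drop the #fragment part.
        absolute, _fragment = urldefrag(urljoin(page_url, href))
        if urlparse(absolute).netloc == site and absolute not in found:
            found.append(absolute)
    return found


# Hypothetical page content used only for illustration.
sample_html = """
<a href="/blog/">Blog</a>
<a href="pricing.html#plans">Pricing</a>
<a href="https://other.example/partner">Partner</a>
<a href="/blog/">Blog again</a>
"""
links = discover_links("https://example.com/index.html", sample_html)
print(links)
```

A real crawler would then fetch each discovered URL and repeat the process, which is why pages without any inbound links are hard for a bot to find.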

The speed of indexing depends on several technical factors:

- quality of hosting and server stability;

- page loading speed;

- presence of a sitemap (sitemap.xml);

- internal linking that creates logical pathways between pages for the bot;

- content uniqueness — duplicated text is indexed more slowly or may not be added at all.

Google’s algorithms also take into account how frequently a site is updated. If a resource regularly publishes new material, the bot visits it more often and picks up changes sooner. This is why blogs, online media outlets, and e-commerce sites tend to appear in search results faster than static corporate websites.

For example, a company that actively maintains a blog and adds several articles each week may see its publications appear in search results within one to two days. Meanwhile, a site that updates only once every few months might have to wait a week or more for indexing. Consistent content updates signal to Google that the site is “alive” and deserves attention.

How to Check If a Page Is Indexed by Google

Many website owners wonder how to make sure their content has actually appeared in search results.

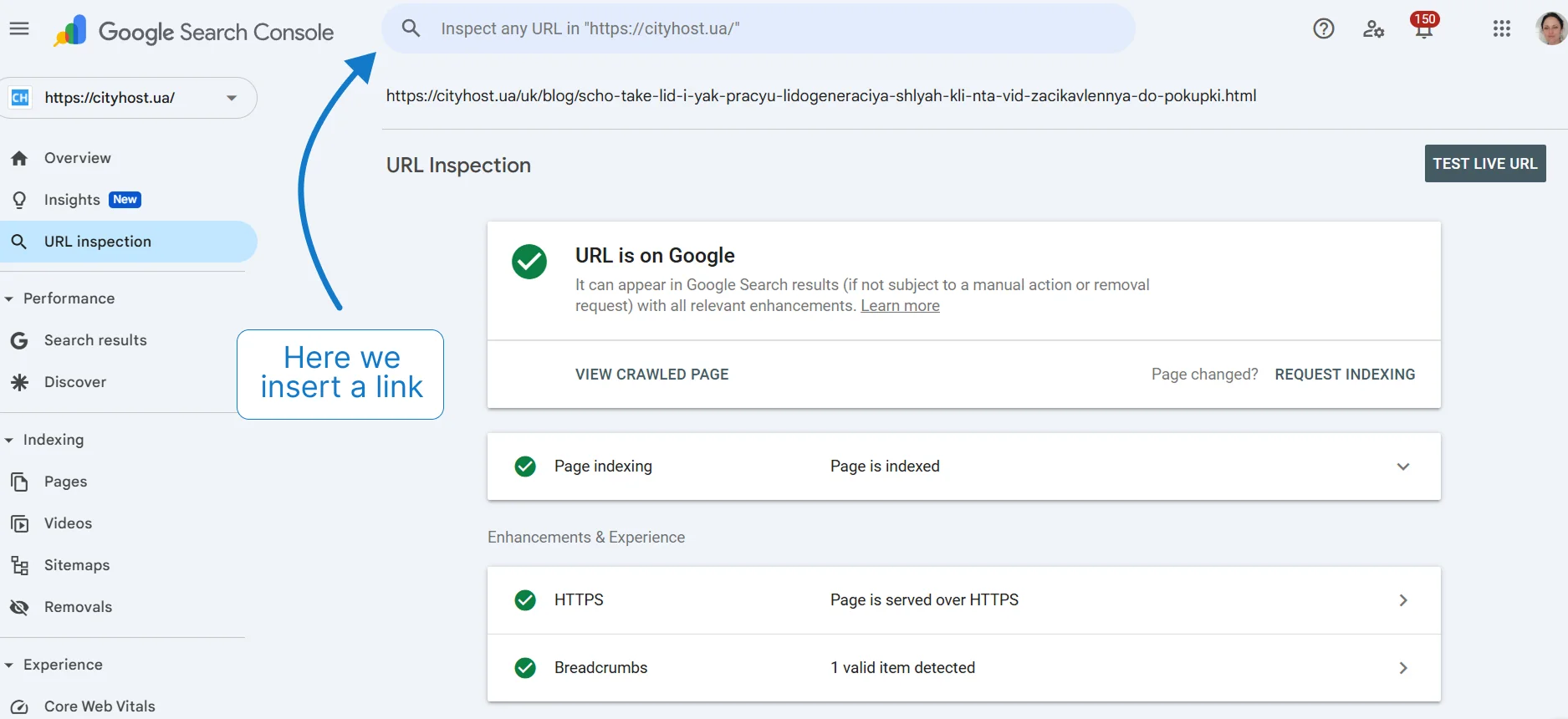

In Google Search Console, you can check the indexing status in the URL Inspection section. The system shows when the page was last crawled, whether it was added to the index, and whether any technical errors were found. For large websites, it’s more convenient to use professional tools such as Ahrefs, Serpstat, or SE Ranking, which allow you to check indexing in bulk.
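If you prefer to check the status from a script, Search Console also offers a URL Inspection API. The sketch below makes no network call: it only builds the request body and summarizes a response that has already been fetched. The field names (`inspectionUrl`, `siteUrl`, `indexStatusResult`, `verdict`, `coverageState`, `lastCrawlTime`) reflect our understanding of that API and should be verified against Google’s documentation; the sample response is invented for illustration.

```python
def build_inspection_request(page_url, property_url):
    """Request body for a URL inspection (field names are assumptions)."""
    return {"inspectionUrl": page_url, "siteUrl": property_url}


def summarize_inspection(response):
    """Reduce an inspection response to the three facts we care about."""
    status = response.get("inspectionResult", {}).get("indexStatusResult", {})
    return {
        "indexed": status.get("verdict") == "PASS",
        "coverage": status.get("coverageState", "unknown"),
        "last_crawl": status.get("lastCrawlTime"),
    }


# Invented response, shaped the way we assume the API answers.
sample_response = {
    "inspectionResult": {
        "indexStatusResult": {
            "verdict": "PASS",
            "coverageState": "Submitted and indexed",
            "lastCrawlTime": "2024-05-01T08:15:00Z",
        }
    }
}

request_body = build_inspection_request(
    "https://example.com/blog/post-1", "https://example.com/"
)
summary = summarize_inspection(sample_response)
print(request_body)
print(summary)
```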

How to Check Indexing with Ahrefs

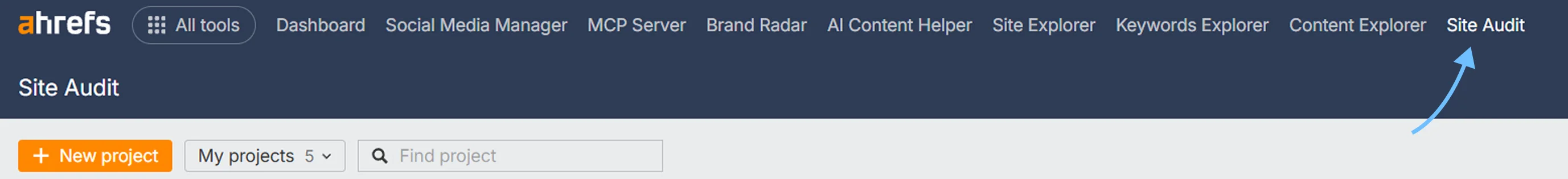

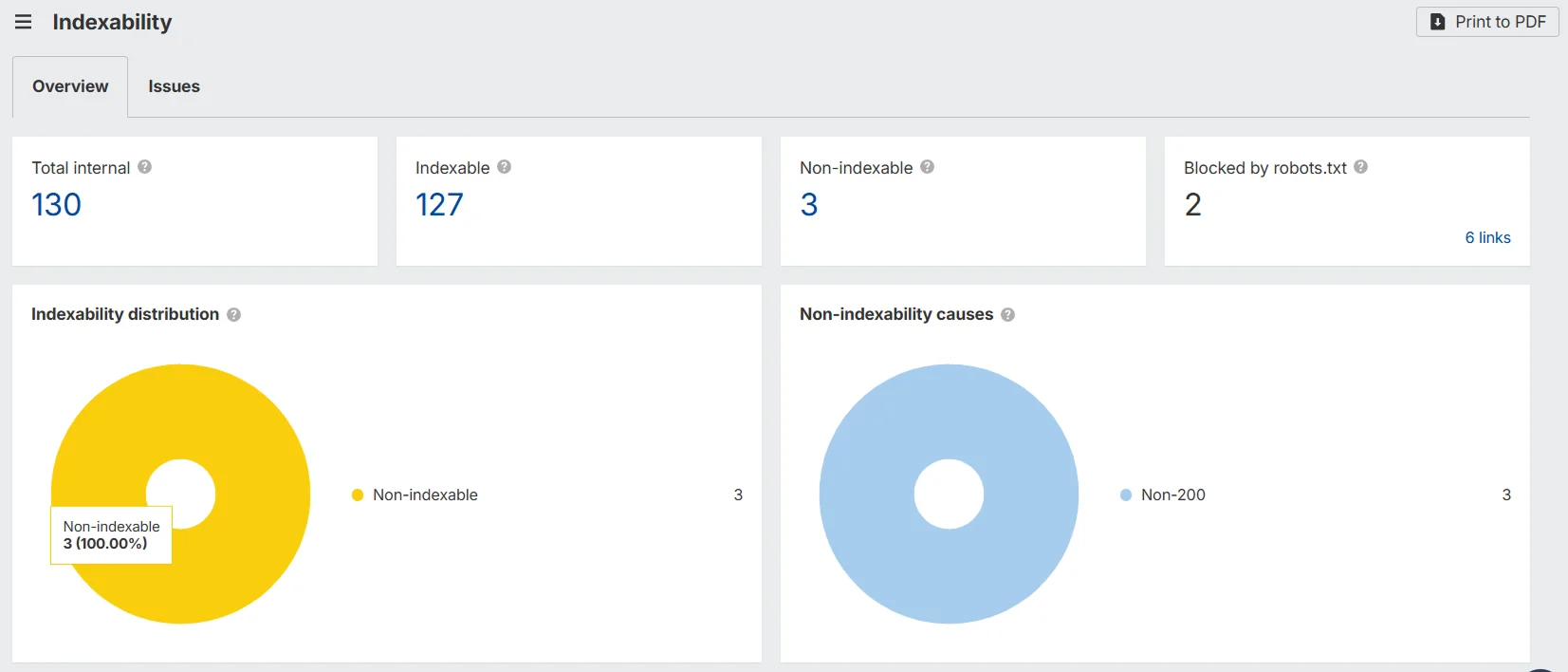

You can check the technical indexability of your website in Ahrefs using the Site Audit tool — it shows which pages are accessible for crawling by search engines and which ones are blocked or have technical issues. However, it’s important to understand that Ahrefs does not show whether pages are already indexed by Google — only whether they can be indexed.

In the paid version of Ahrefs, users get full access to Site Audit and can analyze not only their own website but any other domain as well.

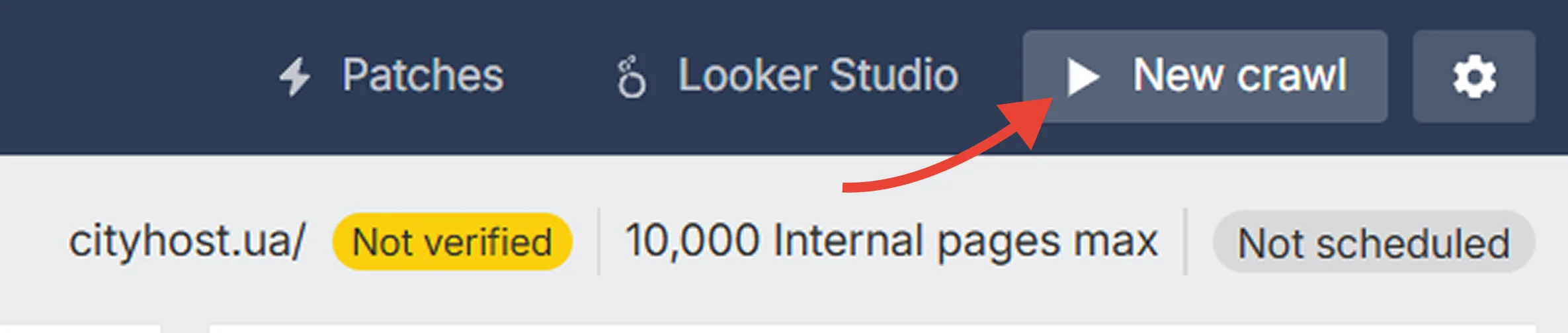

To start the check, go to Site Audit and add your site through New project. You can set up regular automated crawls or launch one manually. The system will crawl all pages, record server response codes, noindex tags, robots.txt directives, canonical links, and generate reports on indexability.

To review pages with technical issues, open the Indexability section. There you’ll see how many pages are available for indexing and the reasons why others cannot be indexed — for example, noindex tags, robots.txt blocking, or 404 errors.

In the example report, Ahrefs shows that 127 of the 130 checked pages on the website are available for indexing, while the remaining 3 have a “Non-200” status (redirects or error pages).
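The logic behind such a report is easy to picture. The sketch below is not Ahrefs’ actual implementation; it assumes you already have crawl results as simple records, and the keys `status`, `noindex`, and `blocked_by_robots` are hypothetical names chosen for this example.

```python
from collections import Counter


def indexability_reason(page):
    """Return 'indexable' or the first reason the page cannot be indexed."""
    if page.get("blocked_by_robots"):
        return "blocked by robots.txt"
    if page["status"] != 200:
        return "non-200"
    if page.get("noindex"):
        return "noindex"
    return "indexable"


# Hypothetical crawl results for illustration.
crawl = [
    {"url": "/", "status": 200},
    {"url": "/blog/", "status": 200},
    {"url": "/old-page", "status": 301},
    {"url": "/missing", "status": 404},
    {"url": "/drafts/post", "status": 200, "noindex": True},
    {"url": "/admin/", "status": 200, "blocked_by_robots": True},
]

report = Counter(indexability_reason(page) for page in crawl)
for reason, count in report.most_common():
    print(f"{reason}: {count}")
```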

In the free version — Ahrefs Webmaster Tools (AWT) — functionality is limited. You can connect only your own website (by verifying it through Google Search Console) and perform a basic audit: check page accessibility, crawl statuses, and blocking directives. But, once again, it’s important to note that this tool does not show whether the pages are already indexed in Google.

To find out whether a page is actually indexed, use Google Search Console or the site:yourdomain.com operator in Google search. The site: operator gives only an approximate picture, so treat Search Console as the more reliable source. It’s best to combine the tools: Ahrefs helps detect technical barriers that prevent indexing, while Search Console confirms the actual indexing status.

In practice, a website’s homepage is usually indexed very quickly, while new blog posts might take several days. This is normal: Google prioritizes crawling based on factors such as the site’s overall authority, update frequency, and the number of internal links.


How to Speed Up Website Indexing

The best strategy is to make the process convenient for search engines. Google should be able to easily find your page, load it quickly, and understand what it’s about. If a website runs smoothly and has a clear structure, new materials can appear in search results within just a few hours.

To speed up indexing, you should take a comprehensive approach:

- Add your site to Google Search Console and submit a sitemap (sitemap.xml) so that the bot receives signals about all new pages.

- Use internal links — they create clear pathways for the bot to navigate between pages, making crawling easier.

- Publish unique and valuable content that stands out from competitors.

- Improve loading speed: optimize images, minimize CSS and JavaScript, and enable caching.

- Earn external links, which help bots find new materials more quickly.

- Update your site regularly, adding new pages or revising old ones — this signals to Google that your resource is active.
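The sitemap mentioned in the first item can be generated with a short script. This is a minimal sketch that assumes you already have a list of page URLs with their last-modified dates; the function name `build_sitemap` and the sample URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last_modified) pairs.

    last_modified is expected as a YYYY-MM-DD string.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        ET.SubElement(entry, "lastmod").text = last_modified
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages for illustration.
sitemap_xml = build_sitemap([
    ("https://example.com/", "2024-05-01"),
    ("https://example.com/blog/new-post", "2024-05-03"),
])
print(sitemap_xml)
```

Save the result as sitemap.xml in the site root and submit its URL in Search Console. Regenerating the file whenever you publish keeps the `lastmod` values accurate.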

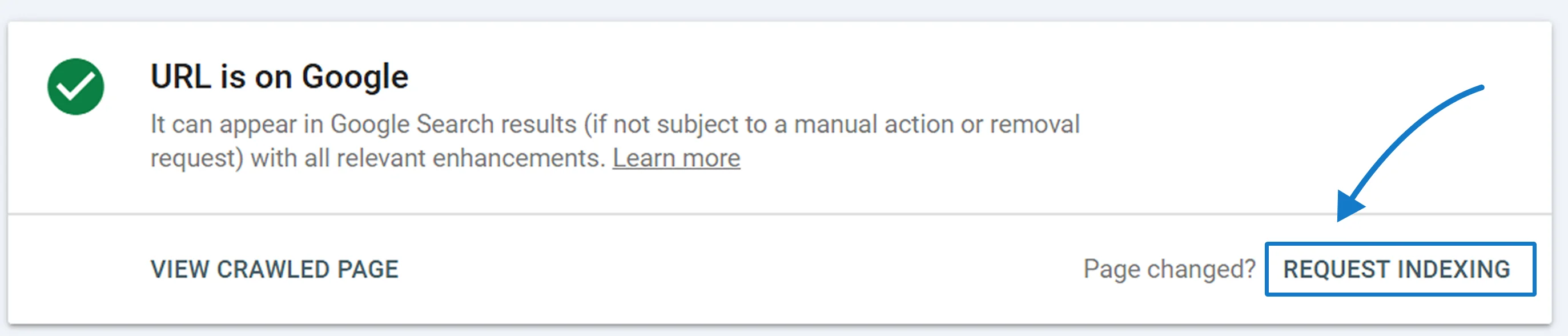

If new material isn’t being indexed, or you’ve updated a page and want the new version indexed as soon as possible, you can request it manually through Search Console. This is especially effective for new domains or pages without external links.

An interesting observation: external links help Google discover new pages faster. The bot frequently visits authoritative websites and follows links from them, so your content gets noticed more quickly. While this doesn’t guarantee instant indexing, it significantly accelerates the discovery phase.

It’s also important to avoid pages with very little text or missing key elements such as headings, meta descriptions, or images. Google indexes complete, well-structured pages with clear content more effectively. Most importantly, be consistent: regular updates and technical cleanliness attract crawlers much faster than infrequent large changes.

How to Avoid Indexing Problems

To ensure that your pages consistently appear in search results, you need to build a technically sound and logically structured website. Search engine crawlers should be able to easily find, scan, and evaluate your content. Most issues arise not because of algorithms, but due to technical details — such as blocked sections, duplicate text, or missing internal links.

To avoid these common mistakes, follow a few proven rules:

- Check your robots.txt and meta tags. Make sure important pages aren’t blocked from crawling and don’t carry the noindex directive.

- Monitor content uniqueness. Google may filter duplicate material out of its results, so your texts must be original and valuable.

- Maintain a logical site structure. Internal links help Googlebot navigate between pages and discover new content.

- Keep your sitemap (sitemap.xml) updated. It signals to search engines when new sections or articles appear.

- Monitor loading speed. Slow pages reduce crawl efficiency — Googlebot has a limited crawl budget.
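The robots.txt check from the first rule can be automated with Python’s standard library. The sketch below parses a robots.txt body that is already in memory, so it needs no network access; the rules and URLs are hypothetical examples, and the helper name `blocked_urls` is ours.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
robots_txt = """\
User-agent: *
Disallow: /admin/
Disallow: /staging/
"""


def blocked_urls(robots_body, urls, user_agent="Googlebot"):
    """Return the URLs that the given user agent is not allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_body.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


important_pages = [
    "https://example.com/blog/post-1",
    "https://example.com/admin/login",
    "https://example.com/staging/new-design",
]
blocked = blocked_urls(robots_txt, important_pages)
print(blocked)
```

Running a check like this against the list of pages you want in search results makes it easy to spot an important URL that was blocked by accident.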

Stable indexing isn’t a coincidence; it’s the result of consistent work. If your site is regularly updated, technically clean, and has clear navigation, search engines will visit it more often, ensuring that new pages are indexed quickly.

When to Block a Website from Indexing

Sometimes a website owner may not want certain pages to appear in search results — for example, if it’s a test version of the site or an internal company section.

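The simplest option is to use the robots.txt file, where you can disallow crawling of specific directories. Keep in mind that robots.txt blocks crawling rather than indexing: a disallowed URL can still show up in results if other sites link to it, and the bot cannot see a noindex tag on a page it is not allowed to crawl. To keep a page out of the index reliably, leave it crawlable and add the following meta tag to the page’s code: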

<meta name="robots" content="noindex, nofollow">

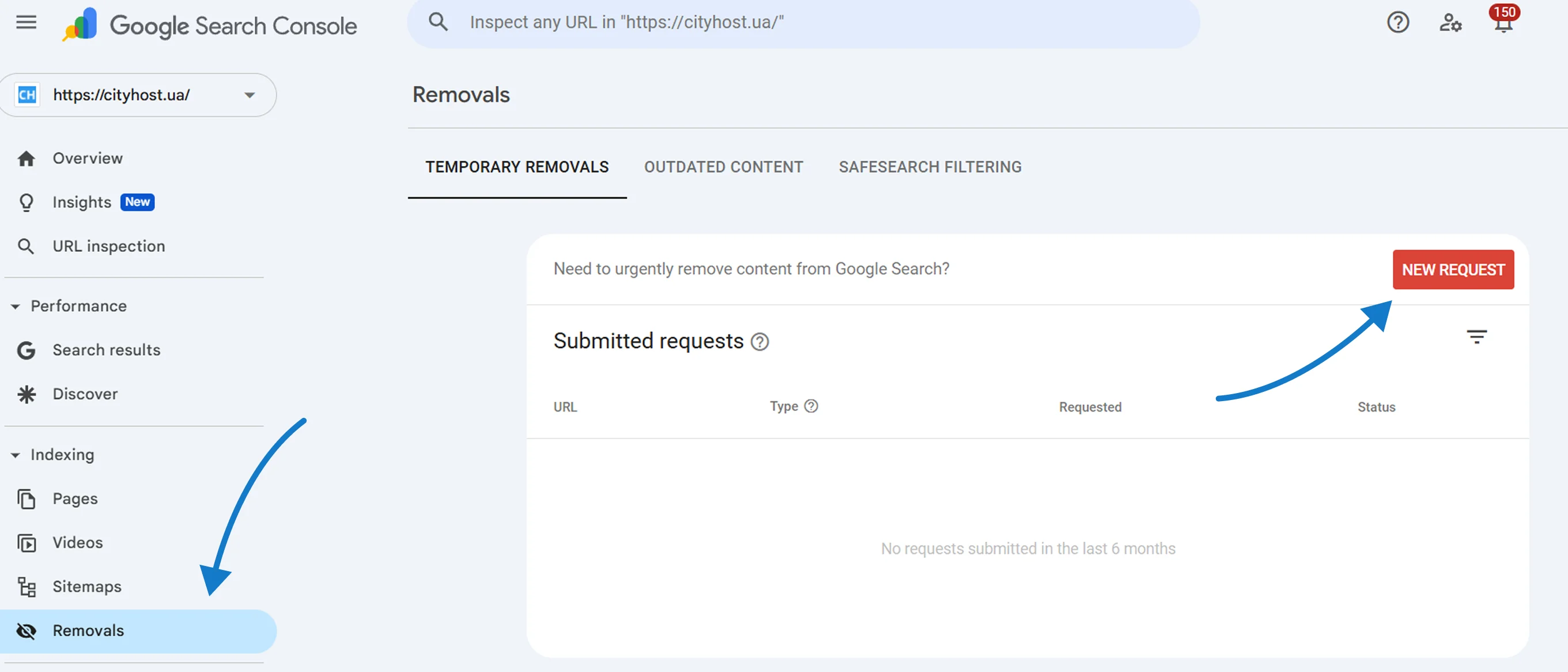

You can also remove a page from search results using the “Removals” tool in Google Search Console. To do this, go to Indexing → Removals and add the page’s URL.

This hides the page from search results temporarily, for about six months. For permanent removal, you need to either add the noindex tag or delete the page from the site entirely.
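To confirm that a page really carries the noindex directive (or to catch one left on a page by mistake), you can inspect its HTML and response headers. This is a minimal sketch: it assumes the HTML and headers have already been downloaded, and the helper name `has_noindex` is hypothetical. It also treats the `none` value as noindex and checks the `X-Robots-Tag` header, which can carry the same directive.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = {name: (value or "") for name, value in attrs}
        if attributes.get("name", "").lower() in ("robots", "googlebot"):
            for directive in attributes.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html, headers=None):
    """Return True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_value = (headers or {}).get("X-Robots-Tag", "")
    header_directives = {d.strip().lower() for d in header_value.split(",")}
    blocking = {"noindex", "none"}
    return bool(blocking & (parser.directives | header_directives))


print(has_noindex('<meta name="robots" content="noindex, nofollow">'))
print(has_noindex('<meta name="robots" content="index, follow">'))
print(has_noindex("<p>No meta tag here</p>", {"X-Robots-Tag": "noindex"}))
```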


Why a Page Is Not Indexed

Sometimes even a high-quality page takes a long time to appear in search results. This isn’t always a developer’s mistake — sometimes Google simply doesn’t see enough value in the content or can’t process it correctly.

- If a page doesn’t contain unique content or duplicates parts of another page, the search engine may ignore it. Google prioritizes pages that genuinely help users — those with a clear structure, fast loading speed, and original material.

- Another common reason is page isolation. If there are no internal links leading to it, the bot might not be able to reach it. That’s why it’s important for all new materials to be interconnected through menus, “related articles” sections, or the sitemap.

- If everything seems correct but the page still isn’t indexed, check its status in Google Search Console. Sometimes the system displays the message “Crawled - currently not indexed”, which means the page has been scanned but not yet added to Google’s database. In such cases, updating the content, improving loading speed, and adding a few internal links can help.
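Isolated pages, as described in the second point, can be found by comparing the sitemap with the internal link graph. The sketch below assumes you already have both as plain Python data, for example from a site crawl; the function name `find_orphans` and the sample paths are hypothetical.

```python
def find_orphans(sitemap_urls, internal_links):
    """Return sitemap URLs that no other page links to.

    internal_links maps each page to the list of pages it links to.
    """
    linked = {
        target
        for source, targets in internal_links.items()
        for target in targets
        if target != source  # a page linking to itself does not count
    }
    return sorted(url for url in sitemap_urls if url not in linked)


# Hypothetical site structure for illustration.
sitemap_urls = ["/", "/blog/", "/blog/post-1", "/blog/post-2", "/landing/promo"]
internal_links = {
    "/": ["/blog/"],
    "/blog/": ["/", "/blog/post-1"],
    "/blog/post-1": ["/blog/", "/blog/post-2"],
    "/blog/post-2": ["/"],
}

orphans = find_orphans(sitemap_urls, internal_links)
print(orphans)
```

Any page in the result is reachable only through the sitemap, so adding a link to it from a menu or a related-articles block gives the bot a regular path to follow.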

The main rule is simple: a page will be indexed only if it is unique, accessible, and useful. If even one of these conditions isn’t met, the search engine sees no reason to include it in the results.

How Hosting Affects Website Indexing

The speed and stability of your hosting provider are among the key technical factors that directly influence how frequently your pages are indexed. If a server responds slowly to requests or frequently goes down, some pages may remain outside the index.

Search engines have a limited crawl budget, that is, the number of pages they’re willing to crawl within a given period. If a website loads slowly or returns server errors, the bot slows down or pauses crawling, so fewer pages are processed on each visit.

That’s why reliable and fast hosting — with SSD storage, up-to-date PHP versions, caching, and a stable network — helps maintain good speed, preserve crawl budget, and promote faster indexing.
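One way to see how your hosting treats crawlers is to look at the server access log. The sketch below assumes the common “combined” log format used by many Apache and Nginx setups and identifies Googlebot by its user-agent string, which can be spoofed, so treat the numbers as a rough signal. The log lines are invented for illustration.

```python
import re
from collections import Counter

# Matches the request and status part of a combined-format log line.
LOG_LINE = re.compile(r'"(?:GET|HEAD) (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def googlebot_status_counts(log_lines):
    """Count Googlebot requests by status class (2xx, 3xx, 4xx, 5xx)."""
    counts = Counter()
    for line in log_lines:
        if "Googlebot" not in line:
            continue
        match = LOG_LINE.search(line)
        if match:
            counts[match.group("status")[0] + "xx"] += 1
    return counts


# Invented log lines for illustration.
bot_agent = '"Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"'
sample_log = [
    f'66.249.66.1 - - [10/May/2024:13:55:36 +0000] "GET /blog/ HTTP/1.1" 200 5123 "-" {bot_agent}',
    f'66.249.66.1 - - [10/May/2024:13:55:40 +0000] "GET /shop/ HTTP/1.1" 503 312 "-" {bot_agent}',
    f'66.249.66.1 - - [10/May/2024:13:56:02 +0000] "GET / HTTP/1.1" 200 8841 "-" {bot_agent}',
    '203.0.113.7 - - [10/May/2024:13:56:10 +0000] "GET / HTTP/1.1" 200 8841 "-" "Mozilla/5.0"',
]

counts = googlebot_status_counts(sample_log)
print(dict(counts))
```

A growing share of 5xx responses to the bot is a sign that the server is struggling and that crawl budget is being wasted.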

Indexing is the first and most crucial step toward making your website visible in Google. Without it, your content doesn’t exist for search engines — and therefore, for potential customers. Check your page status regularly, optimize loading speed, submit sitemaps, and ensure no technical files block bots. Consistent indexing control is part of a systematic SEO strategy that directly determines your brand’s visibility on Google.